Author: whuanle

Sample project repository: https://github.com/whuanle/mcpdemo

MCP has recently become very popular. Many developers are building MCP Servers and Clients, and large companies have launched their own MCP integration platforms or opened MCP interfaces. Readers in technical groups have also been discussing MCP, and many are unclear about how it works; some articles explaining it are vague or even misleading. The author therefore wrote these notes while learning MCP over a weekend, with examples and explanations intended to clarify the relationship between MCP and LLMs and to show how to implement and use MCP in practice.

MCP Protocol

MCP protocol document address: https://modelcontextprotocol.io/introduction

Chinese version of the document: https://mcp-docs.cn/introduction

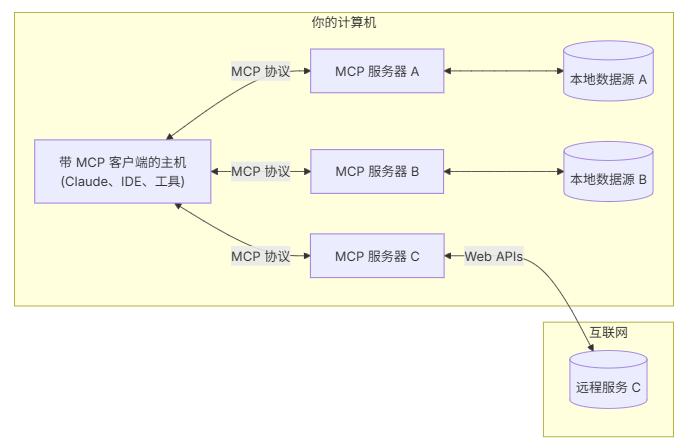

The MCP protocol defines the following roles:

- MCP Hosts: Programs like Claude Desktop, IDE, or AI tools that intend to access data via MCP;

- MCP Clients: Protocol clients that maintain a one-to-one connection with the server;

- MCP Servers: Lightweight programs that provide specific capabilities through the standard Model Context Protocol;

- Local Data Sources: Computer files, databases, and services that the MCP server can access securely;

- Remote Services: External systems on the internet that the MCP server can connect to (such as through APIs);

MCP Host is an AI application, typically a desktop program, that interacts with users. The MCP Host and MCP Client can be developed together, where the host interacts with the user and has the capability to directly call the MCP Server.

The MCP Server provides capabilities such as Tools, resource content, prompts, and conversation completion. Its responsibilities vary from server to server; for example, the Gaode Map MCP Server provides only Tools, that is, callable interfaces.

Local data sources and remote services are not directly related to the MCP itself but are part of the functionality implemented by the MCP Server, or can be seen as the foundational infrastructure and external dependencies supporting the MCP Server.

Due to the numerous concepts and functions of MCP, the author will explain the details step by step using case studies and projects. Readers are encouraged to download the example project repository and try writing code and running cases based on this tutorial.

Core Concepts

The MCP protocol defines the following functional modules:

- Resources

- Prompts

- Tools

- Sampling

- Roots

- Transports

Since there are not many cases for Roots and the C# SDK is not yet fully developed, this article will focus on the other functional modules.

This article does not cover these modules in the order listed above.

Transport

Transport refers to the underlying mechanism for sending and receiving messages. MCP mainly includes two standard transport implementations:

- Standard Input/Output (stdio): The main focus is on local integration and command-line tools, using stdio for communication through standard input and output streams;

- Server-Sent Events (SSE): The server streams messages to the client over a long-lived HTTP connection, while the client sends its own messages to the server through HTTP POST requests;

There is also Streamable HTTP, but because community support for it is not yet mature, this article does not explain it in detail.

Below is a brief comparison of the advantages and disadvantages of stdio, SSE, and Streamable HTTP in MCP (Model Context Protocol):

stdio

- Advantages:

  - High platform compatibility: stdio (standard input/output) is a low-level facility of operating systems and is supported by almost all operating systems and programming languages.

  - Simple and direct: It is used for inter-process communication, typically by scripts and command-line tools, and is easy to implement.

- Disadvantages:

  - Lacks advanced features: stdio can only handle simple text and binary data streams, with no built-in message structure or format.

  - Not suitable for real-time interaction in network environments: stdio is not flexible or reliable enough for network communication and is typically used for local communication.

sse

- Advantages:

  - Real-time updates: Allows the server to actively send update messages to the client via HTTP connections, suitable for real-time push applications.

  - Easy implementation: Based on the HTTP protocol, requiring no complex transport layer protocols; clients can easily receive updates through the EventSource API.

  - Lightweight: Compared to WebSocket, SSE is more lightweight and suitable for simple message-pushing scenarios.

- Disadvantages:

  - Unidirectional communication: Only the server can send messages to the client; if the client needs to send messages, it must do so via standard HTTP requests back to the server.

  - Connection limitations: Browsers impose strict limits on the number of SSE connections, making it unsuitable for applications that require numerous connections.

streamable

- Advantages:

  - High efficiency: Can handle large or continuous data streams without waiting for the entire dataset to be transmitted.

  - Good real-time performance: Allows for incremental transmission as data is generated, and incremental processing as data is consumed, improving real-time responsiveness.

  - High flexibility: Supports long-term connections and transmissions, suitable for applications like video, audio, real-time database synchronization, etc.

- Disadvantages:

  - High complexity: Implementing and managing streaming transmission protocols and handling the logic for data streams are complex; ensuring the order and integrity of the data is crucial.

  - Resource consumption: Long-term connections and ongoing data transmission may consume considerable server and network resources, requiring optimization.

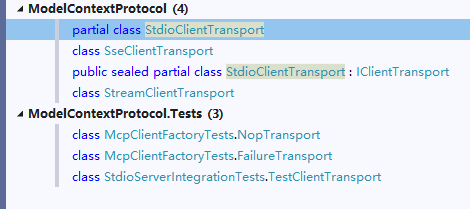

The ModelContextProtocol C# SDK provides three types of client Transport, with the core code found in the following three classes:

- StdioClientTransport

- SseClientTransport

- StreamClientTransport

The author will now detail the stdio and sse transport methods.

stdio

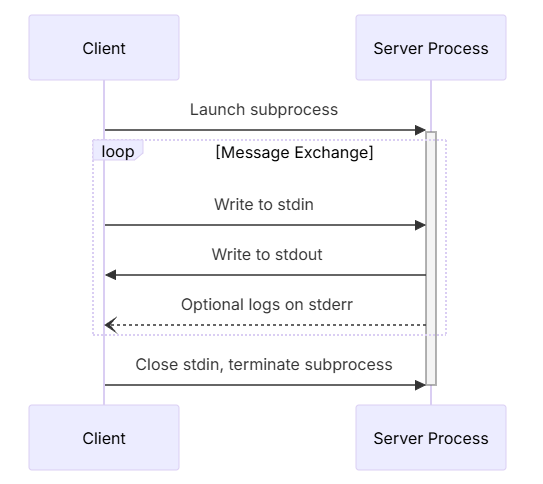

The stdio transport uses local inter-process communication: the client launches the MCP Server program as a child process, and the two sides exchange JSON-RPC messages via stdin/stdout, with each message terminated by a newline character.
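To make the framing concrete, here is a minimal sketch (not the SDK's actual implementation) of the two things a stdio client has to do: describe a child process with redirected stdin/stdout, and write each JSON-RPC message as a single line. The helper names CreateServerStartInfo and ToJsonRpcLine and the server path are assumptions made for illustration; tools/list is the MCP method for listing tools.

```csharp
using System;
using System.Diagnostics;
using System.Text.Json;

// Describe how a client could launch the server as a child process with
// redirected streams. serverPath is a placeholder, not a real path.
static ProcessStartInfo CreateServerStartInfo(string serverPath) => new(serverPath)
{
    RedirectStandardInput = true,   // client -> server messages
    RedirectStandardOutput = true,  // server -> client messages
    UseShellExecute = false,        // required for stream redirection
};

// Serialize one JSON-RPC 2.0 request as a single line; the newline appended
// by the writer is what separates messages on the stream.
static string ToJsonRpcLine(int id, string method) =>
    JsonSerializer.Serialize(new { jsonrpc = "2.0", id, method });

var startInfo = CreateServerStartInfo("path/to/TransportStdioServer.exe");
Console.WriteLine($"stdin redirected: {startInfo.RedirectStandardInput}");
Console.WriteLine(ToJsonRpcLine(1, "tools/list"));
```

A real session would start the process, send an initialize request first, and then read the responses line by line from the child's standard output.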

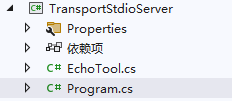

The example projects for this section are TransportStdioServer and TransportStdioClient.

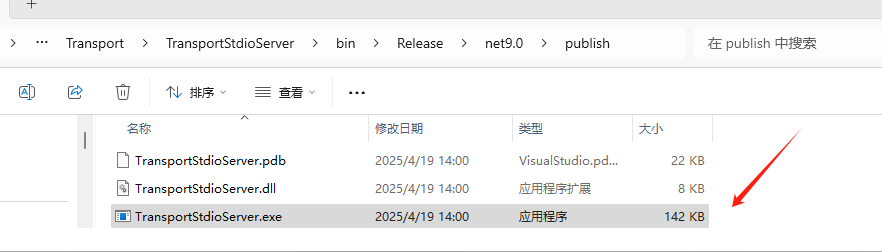

When using stdio, the MCP Server only needs to implement static methods and mark them with attributes; the project is then compiled into an executable (a .exe file on Windows).

TransportStdioServer adds a Tool (Tools are explained in detail later, so the explanation is skipped for now):

```csharp
using System.ComponentModel;
using ModelContextProtocol.Server;

namespace TransportStdioServer;

[McpServerToolType]
public class EchoTool
{
    [McpServerTool, Description("Echoes the message back to the client.")]
    public static string Echo(string message) => $"hello {message}";
}
```

Then create an MCP Server and expose its capabilities over stdio using WithStdioServerTransport().

```csharp
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Hosting;
using Microsoft.Extensions.Logging;
using TransportStdioServer;

var builder = Host.CreateApplicationBuilder(args);

builder.Services.AddMcpServer()
    .WithStdioServerTransport()
    .WithTools<EchoTool>();

// stdout carries protocol messages, so all logs are sent to stderr.
builder.Logging.AddConsole(options =>
{
    options.LogToStandardErrorThreshold = LogLevel.Trace;
});

await builder.Build().RunAsync();
```

Compile the TransportStdioServer project, which generates a .exe file on Windows. Copy the absolute path of the .exe file; it is needed when writing the Client.

When writing the Client in C#, pass the path of the .exe file to the transport as the command to run, as shown below:

```csharp
using Microsoft.Extensions.Configuration;
using Microsoft.Extensions.Hosting;
using ModelContextProtocol.Client;
using ModelContextProtocol.Protocol.Transport;

var builder = Host.CreateApplicationBuilder(args);

builder.Configuration
    .AddEnvironmentVariables()
    .AddUserSecrets<Program>();

var clientTransport = new StdioClientTransport(new()
{
    Name = "Demo Server",
    // Absolute path must be used, omitted here
    Command = "E:/../../TransportStdioServer.exe"
});

await using var mcpClient = await McpClientFactory.CreateAsync(clientTransport);

var tools = await mcpClient.ListToolsAsync();
foreach (var tool in tools)
{
    Console.WriteLine($"Connected to server with tools: {tool.Name}");
}
```

Start TransportStdioClient, and the console prints all MCP tools in TransportStdioServer.

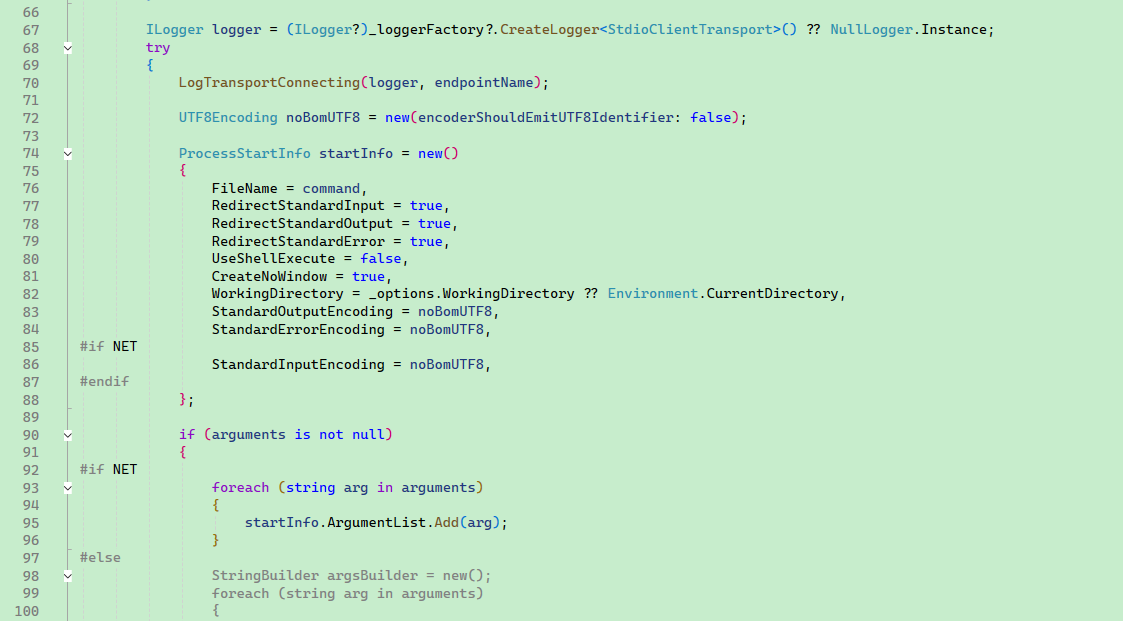

StdioClientTransport works by starting TransportStdioServer from the configured command. It concatenates the command and its arguments and then starts the MCP Server as a child process. The resulting command line looks like this:

```
cmd.exe /c E:/../TransportStdioServer.exe
```

The core code for StdioClientTransport to start the child process:

SSE

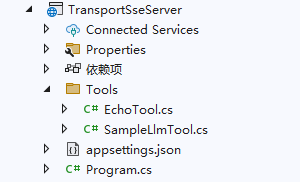

This section references example projects: TransportSseServer, TransportSseClient.

SSE enables remote communication over a long-lived HTTP connection. In many AI chat applications the answer appears character by character, like a typewriter; this technique, in which the HTTP server keeps pushing content over a long-lived HTTP connection, is called SSE.

The SSE Server must provide two endpoints:

- /sse (GET request): Establish a long connection to receive event streams pushed by the server.

- /messages (POST request): The client sends requests to this endpoint.
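What arrives on the GET connection is plain text in the event-stream format: field lines such as event: and data:, with a blank line ending each event. The following is a minimal sketch of a parser for that framing, assuming a simplified subset of the format (no id or retry fields); the function name ParseSse and the sample stream are made up for illustration and are not taken from the SDK.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Parse raw text/event-stream content into (event, data) pairs.
static List<(string Event, string Data)> ParseSse(string raw)
{
    var events = new List<(string Event, string Data)>();
    var eventName = "message";            // default event type in SSE
    var data = new StringBuilder();
    var hasData = false;

    foreach (var rawLine in raw.Split('\n'))
    {
        var line = rawLine.TrimEnd('\r');
        if (line.Length == 0)
        {
            // A blank line dispatches the buffered event, if there is one.
            if (hasData) events.Add((eventName, data.ToString()));
            eventName = "message";
            data.Clear();
            hasData = false;
            continue;
        }
        if (line.StartsWith(':')) continue;   // comment or keep-alive line

        var colon = line.IndexOf(':');
        var field = colon < 0 ? line : line[..colon];
        var value = colon < 0 ? string.Empty : line[(colon + 1)..];
        if (value.StartsWith(' ')) value = value[1..];   // strip one leading space

        if (field == "event")
        {
            eventName = value;
        }
        else if (field == "data")
        {
            if (hasData) data.Append('\n');   // multiple data lines are joined
            data.Append(value);
            hasData = true;
        }
    }
    return events;
}

// Hypothetical stream: the server announces where to POST, then pushes a reply.
var sample = "event: endpoint\ndata: /messages?sessionId=abc\n\n" +
             "event: message\ndata: {\"jsonrpc\":\"2.0\",\"id\":1,\"result\":{}}\n\n";

foreach (var (name, payload) in ParseSse(sample))
{
    Console.WriteLine($"{name} -> {payload}");
}
```

In practice the C# SDK's SseClientTransport handles this parsing; the sketch only shows why one long-lived GET is enough for the server-to-client direction.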

In TransportSseServer, a simple EchoTool is implemented.

```csharp
[McpServerToolType]
public sealed class EchoTool
{
    [McpServerTool, Description("Echoes the input back to the client.")]
    public static string Echo(string message)
    {
        return "hello " + message;
    }
}
```

Configure the MCP Server to support SSE:

```csharp
using TransportSseServer.Tools;

var builder = WebApplication.CreateBuilder(args);

builder.Services.AddMcpServer()
    .WithHttpTransport()
    .WithTools<EchoTool>()
    .WithTools<SampleLlmTool>();

var app = builder.Build();

app.MapMcp();

app.Run("http://0.0.0.0:5000");
```

TransportSseClient implements a client that connects to the MCP Server, and its code is very simple. After connecting to the MCP Server, it lists the supported Tools.

```csharp
using Microsoft.Extensions.Logging;
using Microsoft.Extensions.Logging.Abstractions;
using ModelContextProtocol.Client;
using ModelContextProtocol.Protocol.Transport;

var defaultOptions = new McpClientOptions
{
    ClientInfo = new() { Name = "IntegrationTestClient", Version = "1.0.0" }
};

var defaultConfig = new SseClientTransportOptions
{
    Endpoint = new Uri("http://localhost:5000/sse"),
    Name = "Everything",
};

// Create client and run tests
await using var client = await McpClientFactory.CreateAsync(
    new SseClientTransport(defaultConfig),
    defaultOptions,
    loggerFactory: NullLoggerFactory.Instance);

var tools = await client.ListToolsAsync();
foreach (var tool in tools)
{
    Console.WriteLine($"Connected to server with tools: {tool.Name}");
}
```

Streamable

- Streamable HTTP is an upgraded version of SSE that is entirely based on the standard HTTP protocol and removes the need for a dedicated SSE endpoint. All messages are transmitted through a single endpoint such as /message.

This section does not cover Streamable HTTP further.

MCP Tool Explanation

The community currently has two mainstream LLM development frameworks: Microsoft.SemanticKernel and LangChain. Both support Plugins, which convert local functions, Swagger definitions, and similar sources into functions that are submitted to the LLM. When the AI returns the function it wants to call, the framework invokes it dynamically; this capability is called Function call.

MCP has many features, one of which is MCP Tool. MCP Tool corresponds to Plugin and serves a similar purpose, but MCP covers more than just Tools.

Each LLM framework implements Plugins differently, and their usage and implementation are tightly bound to the host language, so Plugins cannot be reused across services and platforms. MCP Tool was created to provide the same kind of Functions in a standardized way: because MCP has a unified protocol, Tools are language-agnostic and platform-agnostic. Note, however, that MCP does not completely replace Plugins; Plugins are still very useful.

Both MCP Tools and Plugins are ultimately converted into Function calls, which leads many people to confuse MCP, MCP Tool, and Function call. The key point is that MCP Tool corresponds to Plugin, and both exist to be converted into Functions for the AI to use.

MCP Tool

Using TransportSseClient as an example, to call a Tool of TransportSseServer from the Client you need to specify the Tool name and its parameters.

How to provide MCP Tools to the AI model through Semantic Kernel (SK) is discussed later.

```csharp
var echoTool = tools.First(x => x.Name == "Echo");

var result = await client.CallToolAsync("Echo", new Dictionary<string, object?>
{
    { "message", "whuanle" }
});

foreach (var item in result.Content)
{
    Console.WriteLine($"type: {item.Type}, text: {item.Text}");
}
```

Now let's review how the MCP Server provides the Tool.

First, the server defines the Tool class and functions.

```csharp
[McpServerToolType]
public sealed class EchoTool
{
    [McpServerTool, Description("Echoes the input back to the client.")]
    public static string Echo(string message)
    {
        return "hello " + message;
    }
}
```

The MCP Server can expose tools in the following two ways:

// Directly specify Tool class

builder.Services

.AddMcpServer()

.WithHttpTransport()

.WithTools<EchoTool>()

.WithTools<SampleLlmTool>();

// Scan assembly

builder.Services

.AddMcpServer()

.WithHttpTransport()

.WithStdioServerTransport()

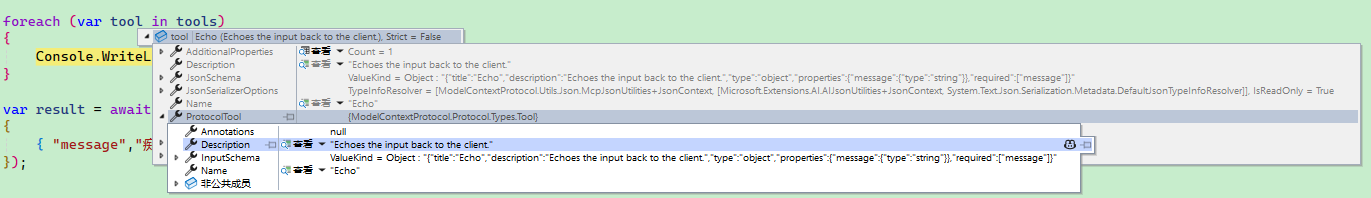

.WithToolsFromAssembly();When the client recognizes the Tool list on the server side, it can use McpClientTool.ProtocolTool.InputSchema to obtain the input parameter format for the tool:

An example of its content format is as follows:

```
Annotations: null
Description: "Echoes the input back to the client."
Name: "Echo"
InputSchema: "{\"title\":\"Echo\",\"description\":\"Echoes the input back to the client.\",\"type\":\"object\",\"properties\":{\"message\":{\"type\":\"string\"}},\"required\":[\"message\"]}"
```

[McpServerToolType] marks types that contain methods to be exposed as ModelContextProtocol.Server.McpServerTool instances.

[McpServerTool] indicates that the method should be treated as a ModelContextProtocol.Server.McpServerTool.

[Description] supplies the description text, which appears in the Description field shown above.
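Because InputSchema is ordinary JSON Schema, a client can inspect it before calling a tool. Below is a minimal sketch that lists each parameter with its type and whether it is required, using only System.Text.Json. The function name DescribeParameters is hypothetical; the schema string is the Echo example shown above.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text.Json;

// List the parameters declared in a tool's JSON Schema.
static List<(string Name, string Type, bool Required)> DescribeParameters(string inputSchemaJson)
{
    using var doc = JsonDocument.Parse(inputSchemaJson);
    var root = doc.RootElement;

    // Names listed under "required" (the array may be absent).
    var required = new HashSet<string?>();
    if (root.TryGetProperty("required", out var req))
    {
        required = req.EnumerateArray().Select(e => e.GetString()).ToHashSet();
    }

    var result = new List<(string Name, string Type, bool Required)>();
    if (root.TryGetProperty("properties", out var props))
    {
        foreach (var p in props.EnumerateObject())
        {
            // Fall back to "any" when "type" is missing or is not a plain string.
            var typeName = p.Value.TryGetProperty("type", out var t) && t.ValueKind == JsonValueKind.String
                ? t.GetString()!
                : "any";
            result.Add((p.Name, typeName, required.Contains(p.Name)));
        }
    }
    return result;
}

var schema = "{\"title\":\"Echo\",\"description\":\"Echoes the input back to the client.\"," +
             "\"type\":\"object\",\"properties\":{\"message\":{\"type\":\"string\"}},\"required\":[\"message\"]}";

foreach (var (name, kind, isRequired) in DescribeParameters(schema))
{
    Console.WriteLine($"{name}: {kind}, required = {isRequired}");
}
```

This is the same information an LLM framework uses when it turns an MCP Tool into a Function definition for the model.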

Dependency Injection

When implementing Tool functions, the server can use dependency injection: registered services are injected as parameters of the Tool method.

Refer to the sample projects InjectServer and InjectClient.

Add a service class and register it in the container.

```csharp
public class MyService
{
    public string Echo(string message)
    {
        return "hello " + message;
    }
}
```

```csharp
builder.Services.AddScoped<MyService>();
```

Inject this service in the Tool function:

```csharp
[McpServerToolType]
public sealed class MyTool
{
    [McpServerTool, Description("Echoes the input back to the client.")]
    public static string Echo(MyService myService, string message)
    {
        return myService.Echo(message);
    }
}
```

Submit MCP Tool to AI Dialogue

As mentioned earlier, both MCP Tool and Plugin are means of implementing Function calls. The main process when using Tool in AI dialogue is as follows:

When you ask a question:

- The client sends your question to the LLM;

- The LLM analyzes the available tools and decides which tools to use;

- The client executes the selected tool through the MCP server;

- The result is sent back to the LLM;

- The LLM formulates a natural language response;

- The response is displayed to you;

This cycle may repeat several times for a single question. The details are discussed in the section Gaode Map MCP Practical Application; here it is only outlined.
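The steps above can be sketched as a small loop. Everything in this sketch is a stand-in: FakeLlm imitates a model that first requests a tool and then answers, and the dictionary imitates the tools of an MCP server. It only illustrates the control flow in which the client, not the model, executes the tool; it is not how Semantic Kernel or the MCP SDK are implemented.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for the LLM. Given the conversation so far it either
// requests a tool call (ToolName set) or returns a final answer (Answer set).
static (string? ToolName, string Argument, string? Answer) FakeLlm(List<string> conversation)
{
    var last = conversation[^1];
    if (last.StartsWith("tool:", StringComparison.Ordinal))
    {
        // A tool result is available, so produce the final answer.
        return (null, string.Empty, "The tool said: " + last["tool:".Length..]);
    }
    // No tool result yet: ask the client to run the Echo tool.
    return ("Echo", "whuanle", null);
}

// The client-side loop: ask the model, run the tool it picked, feed the result back.
static string RunDialogue(
    string question,
    Dictionary<string, Func<string, string>> toolTable,
    int maxRounds = 5)
{
    var conversation = new List<string> { "user:" + question };
    for (var round = 0; round < maxRounds; round++)
    {
        var (toolName, argument, answer) = FakeLlm(conversation);
        if (toolName is null)
        {
            return answer ?? string.Empty;              // the model is done
        }
        var toolResult = toolTable[toolName](argument); // with MCP: a call to the server
        conversation.Add("tool:" + toolResult);
    }
    return "(stopped: too many tool rounds)";
}

// Stand-in for the tools an MCP server exposes, keyed by tool name.
var demoTools = new Dictionary<string, Func<string, string>>
{
    ["Echo"] = message => "hello " + message,
};

Console.WriteLine(RunDialogue("Say hello to whuanle", demoTools));
```

The maxRounds guard reflects the point that the cycle can repeat: a client usually caps the number of tool rounds so that a confused model cannot loop forever.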

Pseudo code for submitting Tools to the dialogue context:

```csharp
// Get available functions.
IList<McpClientTool> tools = await client.ListToolsAsync();

// Call the chat client using the tools.
IChatClient chatClient = ...;
var response = await chatClient.GetResponseAsync(
    "your prompt here",
    new() { Tools = [.. tools] });
```

Gaode Map MCP Practical Application

After all this background, we finally reach the hands-on integration stage. This section uses the Gaode Map case to explain the logic of MCP Tools and how to integrate them.

For the code, refer to the sample project amap.

The functionalities currently provided by the Gaode Map MCP Server primarily include:

- Geocoding

- Reverse geocoding

- IP localization

- Weather query

- Bicycling route planning

- Walking route planning

- Driving route planning

- Public transport route planning

- Distance measurement

- Keyword search

- Nearby search

- Detail search

The names of its tools are as follows:

```
maps_direction_bicycling
maps_direction_driving
maps_direction_transit_integrated
maps_direction_walking
maps_distance
maps_geo
maps_regeocode
maps_ip_location
maps_around_search
maps_search_detail
maps_text_search
maps_weather
```

Gaode Map gives developers a free daily quota, so there is no need to worry about costs while running this experiment.

Go to https://console.amap.com/dev/key/app to create a new application and copy the application key.

Gaode MCP server address:

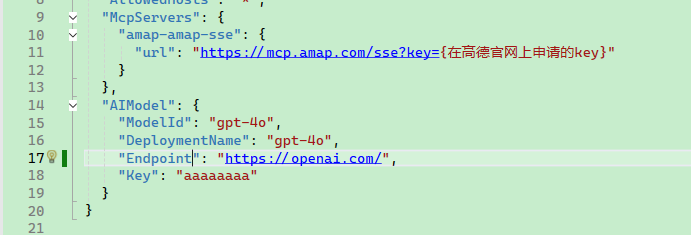

https://mcp.amap.com/sse?key={Your Key Applied from Gaode Official Website}In the appsettings.json of the amap project, add the following JSON and replace certain parameters:

Note: Except for the gpt-4o model, other models registering Function calls can also be used.

"McpServers": {

"amap-amap-sse": {

"url": "https://mcp.amap.com/sse?key={Your Key Applied from Gaode Official Website}"

}

},

"AIModel": {

"ModelId": "gpt-4o",

"DeploymentName": "gpt-4o",

"Endpoint": "https://openai.com/",

"Key": "aaaaaaaa"

}

Import the configuration and create a logger:

var configuration = new ConfigurationBuilder()

.AddJsonFile("appsettings.json")

.AddJsonFile("appsettings.Development.json")

.Build();

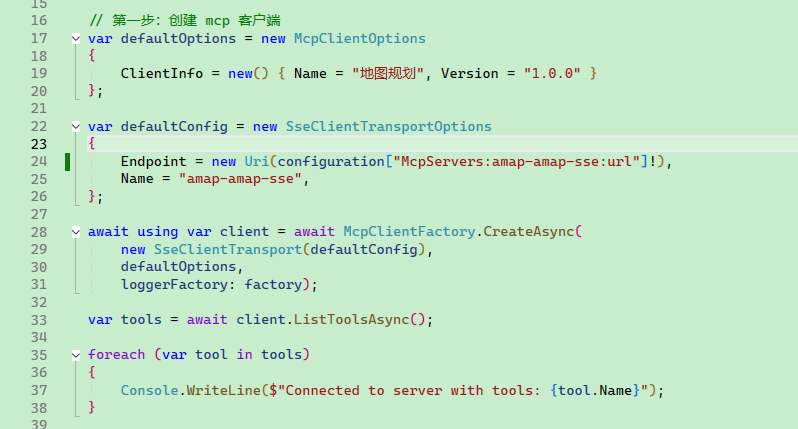

using ILoggerFactory factory = LoggerFactory.Create(builder => builder.AddConsole());Step One: Create MCP Client

Connect to Gaode MCP Server and obtain the Tool list.

```csharp
var defaultOptions = new McpClientOptions
{
    ClientInfo = new() { Name = "Map Planning", Version = "1.0.0" }
};

var defaultConfig = new SseClientTransportOptions
{
    Endpoint = new Uri(configuration["McpServers:amap-amap-sse:url"]!),
    Name = "amap-amap-sse",
};

await using var client = await McpClientFactory.CreateAsync(
    new SseClientTransport(defaultConfig),
    defaultOptions,
    loggerFactory: factory);

var tools = await client.ListToolsAsync();
foreach (var tool in tools)
{
    Console.WriteLine($"Connected to server with tools: {tool.Name}");
}
```

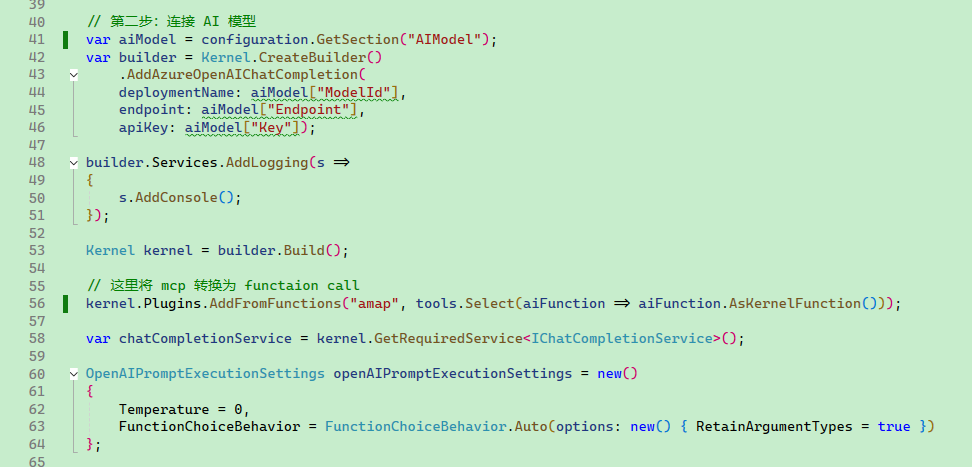

Step Two: Connect AI Model and Configure MCP

Use the SemanticKernel framework to integrate the LLM, converting MCP Tool into a Function added to the dialogue context.

```csharp
var aiModel = configuration.GetSection("AIModel");

var builder = Kernel.CreateBuilder()
    .AddAzureOpenAIChatCompletion(
        deploymentName: aiModel["ModelId"],
        endpoint: aiModel["Endpoint"],
        apiKey: aiModel["Key"]);

builder.Services.AddLogging(s =>
{
    s.AddConsole();
});

Kernel kernel = builder.Build();

// Here convert mcp to function call
kernel.Plugins.AddFromFunctions("amap", tools.Select(aiFunction => aiFunction.AsKernelFunction()));

var chatCompletionService = kernel.GetRequiredService<IChatCompletionService>();

OpenAIPromptExecutionSettings openAIPromptExecutionSettings = new()
{
    Temperature = 0,
    FunctionChoiceBehavior = FunctionChoiceBehavior.Auto(options: new() { RetainArgumentTypes = true })
};
```

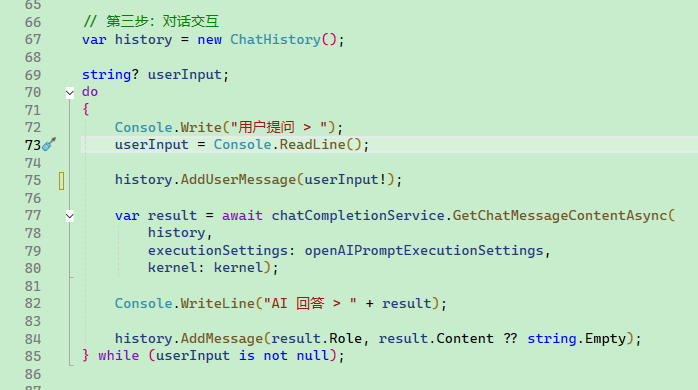

Step Three: Dialogue Interaction

Write console code to interact with the user.

```csharp
var history = new ChatHistory();

while (true)
{
    Console.Write("User Question > ");
    var userInput = Console.ReadLine();

    // ReadLine returns null at end of input; stop instead of sending null to the model.
    if (userInput is null)
    {
        break;
    }

    history.AddUserMessage(userInput);

    var result = await chatCompletionService.GetChatMessageContentAsync(
        history,
        executionSettings: openAIPromptExecutionSettings,
        kernel: kernel);

    Console.WriteLine("AI Response > " + result);

    // Keep the assistant's reply in the history so later turns have context.
    history.AddMessage(result.Role, result.Content ?? string.Empty);
}
```

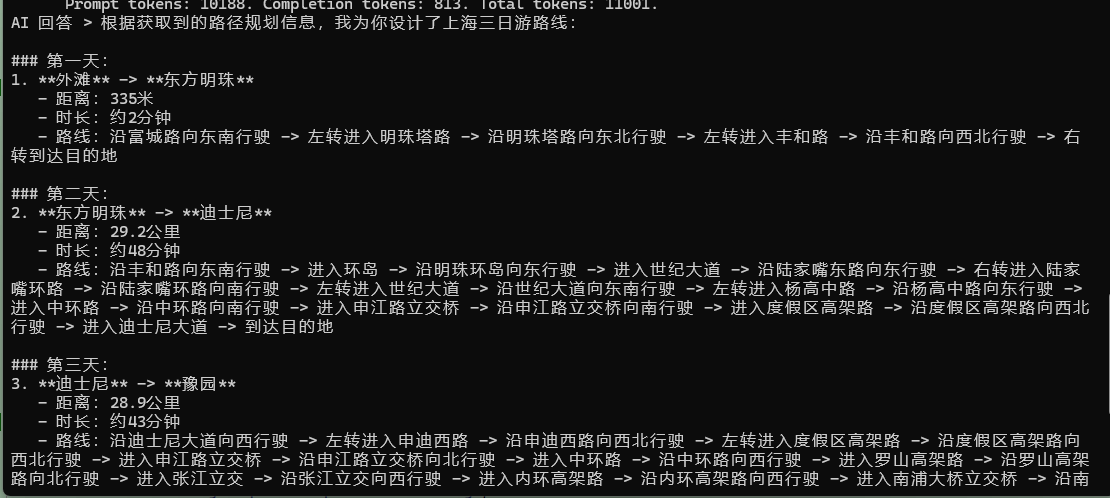

Demonstration of Map Planning

Note that due to the free quota limits of Gaode Map, and the fact that there may be multiple requests to the MCP Server during AI dialogue, the effect may not always be optimal.

1. Smart Travel Route Planning

It supports route planning with up to 16 waypoints, automatically calculates the optimal order, and provides a visual map link.

Usage example:

Please help me plan a three-day trip in Shanghai, including the Bund, Oriental Pearl, Disneyland, Yuyuan Garden, and Nanjing Road, and provide a visual map

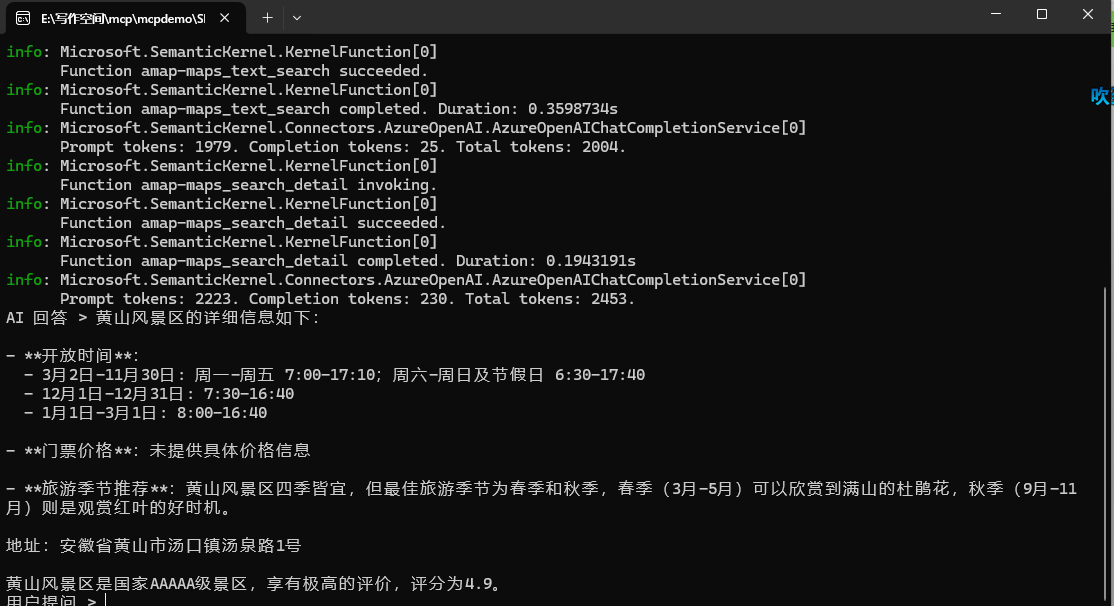

2. Attraction Search and Detail Query

Query detailed information about attractions, including ratings, opening hours, ticket prices, and more.

Usage example:

Please inquire about the opening hours, ticket prices, and recommended tourism seasons for Huangshan Scenic Area

How AI Recognizes MCP Calls

In the Gaode Map planning code, one statement converts the MCP server's interfaces into Functions, as shown below:

```csharp
kernel.Plugins
    .AddFromFunctions("amap", tools.Select(aiFunction => aiFunction.AsKernelFunction()))
```

From this it is clear that the AI model does not call the MCP Server directly; the Client still performs the Function call.

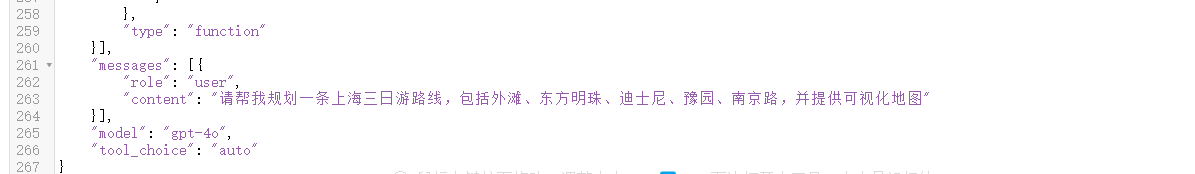

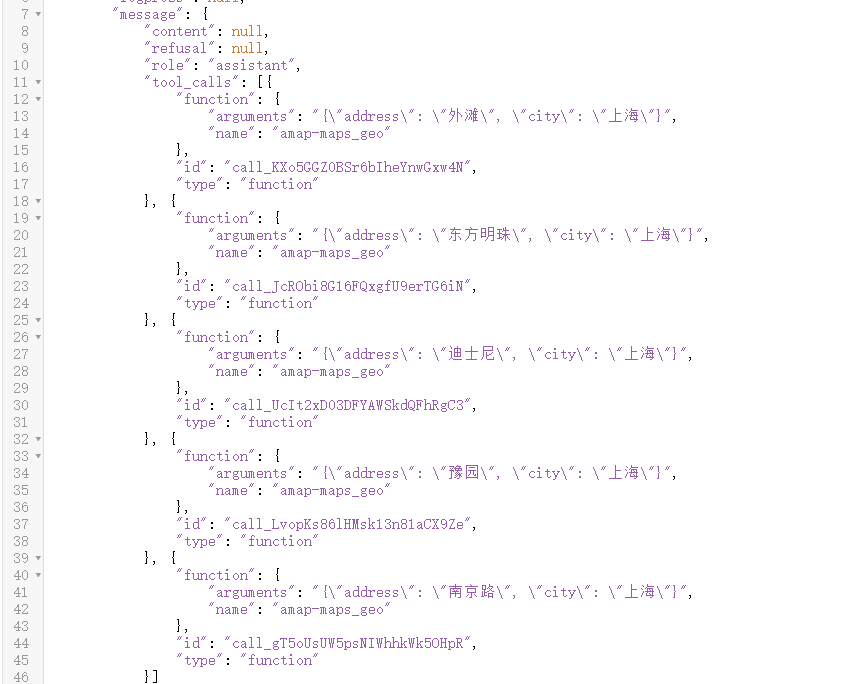

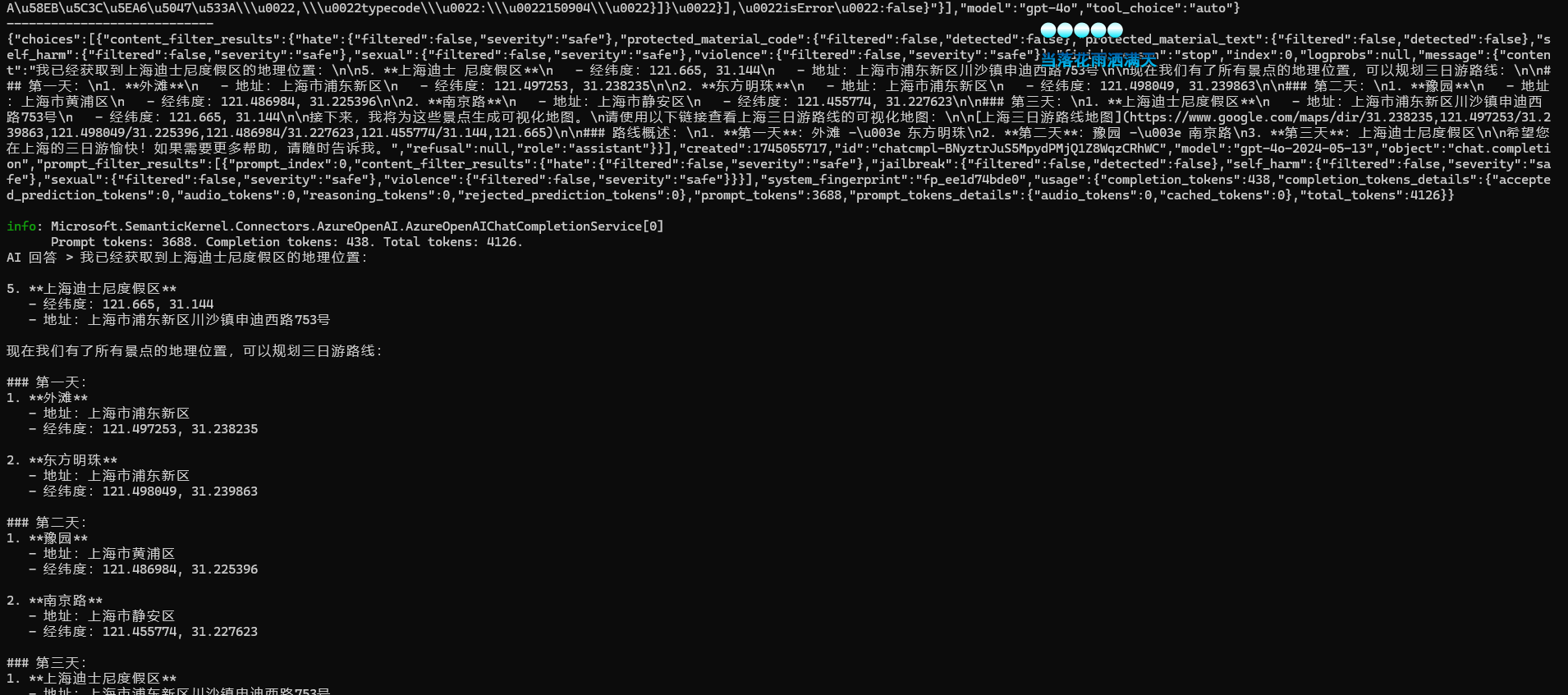

By intercepting the HTTP requests, we can see that when the user enters "Please help me plan a three-day trip in Shanghai, including the Bund, Oriental Pearl, Disneyland, Yuyuan Garden, and Nanjing Road, and provide a visual map", the client first sends the user's question, together with the list of functions provided by the MCP service, to the AI model server.

During the dialogue, the Client provides the LLM with the list of Functions (MCP Tools).

The AI then specifies the function calls to make and their parameters, after which the Client locates the MCP Server and calls each Tool in sequence.

The LLM returns the list of Functions to call, in order, together with their parameters.

The Client then submits the result of each Function, together with the user's question and other context, to the AI model server again.

Due to concurrency limits on the Gaode interfaces, some calls may fail, so the Client may have to send several requests before the AI's final response is output.

By now, the reader should understand the relationship between MCP Tool, Plugin, and Function call.

Implementing MCP Server

Earlier, I introduced MCP Tool, but the MCP Server can also provide many useful functions. The MCP protocol defines the following core modules:

- Core architecture

- Resources

- Prompts

- Tools

- Sampling

- Roots

- Transports

Tools are currently the feature the community pays most attention to, so this article covered them separately; the following sections explain the other functional modules.

Implementing Resources

Sample project references: ResourceServer, ResourceClient.

Definition of Resources: Resources are a core primitive in Model Context Protocol (MCP) that allow the server to expose data and content that can be read by clients and used as LLM interaction context.

Resources represent any type of data that the MCP server wants to provide to clients. In practice, the MCP Server defines a URI for each kind of resource. The URI scheme can be made up; what matters is that the URI string lets the server locate the resource.

This may be hard to grasp from the definition alone; it becomes clear once you work through the examples.

Resources can include:

- File contents

- Database records

- API responses

- Real-time system data

- Screenshots and images

- Log files

- And more

Each resource is identified by a unique URI and can contain either text or binary data.

Resources are identified using URIs in the following format:

```
[protocol]://[host]/[path]
```

For example:

```
file:///home/user/documents/report.pdf
postgres://database/customers/schema
screen://localhost/display1
```
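As a quick illustration of the [protocol]://[host]/[path] layout, the sketch below splits resource URIs with the standard System.Uri class. The helper name SplitResourceUri is hypothetical; the URIs are the examples from the text plus the test:// URI used later in this article.

```csharp
using System;

// Split a resource URI into its scheme (protocol), host, and path parts.
static (string Scheme, string Host, string Path) SplitResourceUri(string uri)
{
    var parsed = new Uri(uri);
    return (parsed.Scheme, parsed.Host, parsed.AbsolutePath);
}

string[] examples =
{
    "file:///home/user/documents/report.pdf",
    "postgres://database/customers/schema",
    "screen://localhost/display1",
    "test://static/resource/README.txt",
};

foreach (var example in examples)
{
    var (scheme, host, path) = SplitResourceUri(example);
    Console.WriteLine($"scheme = {scheme}, host = '{host}', path = {path}");
}
```

Custom schemes such as test:// need no registration: System.Uri parses them generically, which matches the idea that the scheme only has to be meaningful to the server that defines it.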

Resource contents fall into two main types: text resources and binary resources.

Text Resources

Text resources contain UTF-8 encoded text data. These are suitable for:

- Source code

- Configuration files

- Log files

- JSON/XML data

- Plain text

Binary Resources

Binary resources contain raw binary data encoded in base64.

These are suitable for:

- Images

- PDFs

- Audio files

- Video files

- Other non-text formats
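Base64 is what lets binary content travel inside a JSON message. A minimal sketch of the round trip, using the first four bytes of a PNG file as sample data (the helper names EncodeBlob and DecodeBlob are made up for this example):

```csharp
using System;
using System.Linq;

// Encode raw bytes as a base64 string that can be embedded in JSON.
static string EncodeBlob(byte[] bytes) => Convert.ToBase64String(bytes);

// Decode the base64 string back into the original bytes.
static byte[] DecodeBlob(string blob) => Convert.FromBase64String(blob);

byte[] pngSignature = { 0x89, 0x50, 0x4E, 0x47 };

var encoded = EncodeBlob(pngSignature);
Console.WriteLine($"base64: {encoded}");
Console.WriteLine($"round trip ok: {DecodeBlob(encoded).SequenceEqual(pngSignature)}");
```

The price is size: base64 output is roughly a third larger than the raw bytes, which is worth keeping in mind for large images or video.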

<br />

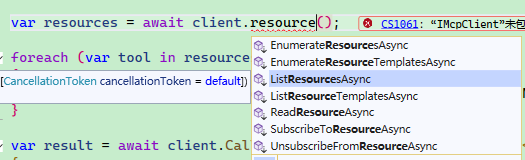

#### Resources Server & Client Implementation

The client uses the following APIs to work with Resources. This section focuses on how to implement the corresponding functionality on the server side for these interfaces.

There are two main ways to provide Resources: dynamically, by exposing a Resource Uri format through templates, or directly, by providing specific Resource Uris.

Example Resource Uri format:

"test://static/resource/{README.txt}"

The Resource Uri format provided by MCP Server can be freely customized. These Uris are not directly read by the Client. When the Client needs to read Resources, it sends the Uri to the MCP Server, which parses the Uri and locates the corresponding resource, then returns the resource content to the Client.

In other words, the protocol of this Uri is essentially just a string, as long as it can be used between the current MCP Server and Client.
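Since the URI is just a string agreed between server and client, the server decides how to interpret it. The sketch below shows one simple way to match an incoming URI against a template with a single {name} placeholder and extract the value. The function TryMatchTemplate is hypothetical and deliberately minimal; it is not the SDK's template handling and does not implement the full URI template syntax.

```csharp
using System;

// Match a concrete URI against a template that contains one {name} placeholder,
// for example "test://static/resource/{id}", and extract the placeholder value.
static bool TryMatchTemplate(string template, string uri, out string value)
{
    value = string.Empty;

    var open = template.IndexOf('{');
    var close = template.IndexOf('}');
    if (open < 0 || close < open)
    {
        return false;   // the template has no placeholder
    }

    var prefix = template[..open];           // literal text before the placeholder
    var suffix = template[(close + 1)..];    // literal text after the placeholder

    // The URI must be longer than the literal parts, start with the prefix,
    // and end with the suffix.
    if (uri.Length <= prefix.Length + suffix.Length ||
        !uri.StartsWith(prefix, StringComparison.Ordinal) ||
        !uri.EndsWith(suffix, StringComparison.Ordinal))
    {
        return false;
    }

    value = uri[prefix.Length..(uri.Length - suffix.Length)];
    return true;
}

if (TryMatchTemplate("test://static/resource/{id}", "test://static/resource/42", out var id))
{
    Console.WriteLine($"resource id = {id}");
}
```

A server would typically run this kind of check inside its read handler and then look the resource up by the extracted value.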

<br />

MCP Server can provide a certain type of resource through templates, where the addresses of these resources are dynamic and need to be obtained in real-time based on the id.

```csharp
builder.Services.AddMcpServer()
    .WithListResourceTemplatesHandler(async (ctx, ct) =>
    {
        return new ListResourceTemplatesResult
        {
            ResourceTemplates =
            [
                new ResourceTemplate { Name = "Static Resource", Description = "A static resource with a numeric ID", UriTemplate = "test://static/resource/{id}" }
            ]
        };
    });
```

Resources with fixed addresses can be exposed as follows. For example, if there is a file that must be read, its address only needs to be fixed.

```csharp
builder.Services.AddMcpServer()
    .WithListResourcesHandler(async (ctx, ct) =>
    {
        await Task.CompletedTask;

        var readmeResource = new Resource
        {
            Uri = "test://static/resource/README.txt",
            Name = "Resource README.txt",
            MimeType = "application/octet-stream",
            // The description is stored as a base64 string (requires using System.Text).
            Description = Convert.ToBase64String(Encoding.UTF8.GetBytes("This is a must-read file"))
        };

        return new ListResourcesResult
        {
            Resources = new List<Resource>
            {
                readmeResource
            }
        };
    });
```

The Client reads the resource templates and the static resource list:

var defaultOptions = new McpClientOptions

{

ClientInfo = new() { Name = "ResourceClient", Version = "1.0.0" }

};

var defaultConfig = new SseClientTransportOptions

{

Endpoint = new Uri($"http://localhost:5000/sse"),

Name = "Everything",

};

// Create client and run tests

await using var client = await McpClientFactory.CreateAsync(

new SseClientTransport(defaultConfig),

defaultOptions,

loggerFactory: NullLoggerFactory.Instance);

var resourceTemplates = await client.ListResourceTemplatesAsync();

var resources = await client.ListResourcesAsync();

foreach (var template in resourceTemplates)

{

Console.WriteLine($"Connected to server with resource templates: {template.Name}");

}

foreach (var resource in resources)

{

Console.WriteLine($"Connected to server with resources: {resource.Name}");

}Thus, if the client needs to read resources from the MCP server, it just needs to pass the Resource Uri.

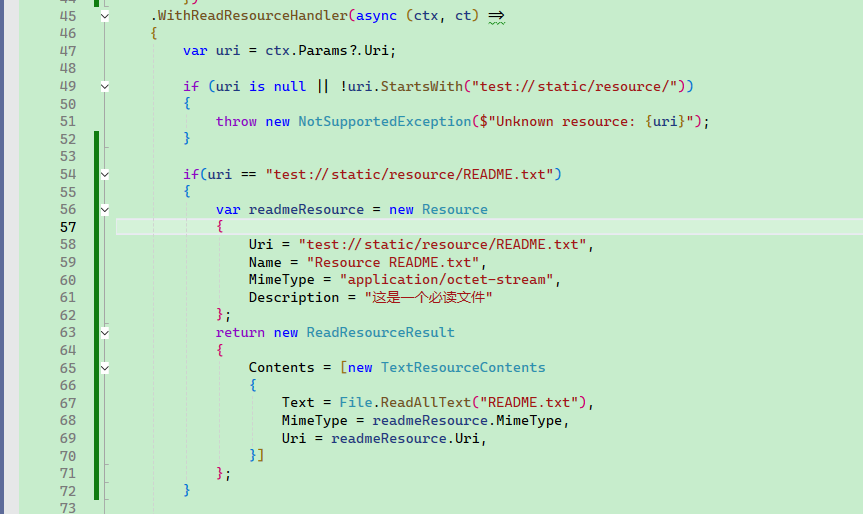

var readmeResource = await client.ReadResourceAsync(resources.First().Uri);This section only introduces the Resource Uri provided by the MCP Server. So how does the MCP Server handle requests when the Client wants to retrieve the content of a certain Resource Uri?

The ModelContextProtocol C# SDK currently offers two content types:

- TextResourceContents

- BlobResourceContents

For example, when the Client accesses test://static/resource/README.txt, the server can return the README.txt file directly as text:

```csharp
.WithReadResourceHandler(async (ctx, ct) =>
{
    var uri = ctx.Params?.Uri;

    if (uri is null || !uri.StartsWith("test://static/resource/"))
    {
        throw new NotSupportedException($"Unknown resource: {uri}");
    }

    if (uri == "test://static/resource/README.txt")
    {
        var readmeResource = new Resource
        {
            Uri = "test://static/resource/README.txt",
            Name = "Resource README.txt",
            MimeType = "application/octet-stream",
            Description = "This is a must-read file"
        };

        return new ReadResourceResult
        {
            Contents = [new TextResourceContents
            {
                Text = await File.ReadAllTextAsync("README.txt", ct),
                MimeType = readmeResource.MimeType,
                Uri = readmeResource.Uri,
            }]
        };
    }

    // Every code path must return or throw; other URIs are covered by the next snippet.
    throw new NotSupportedException($"Unknown resource: {uri}");
})
```

If the Client accesses another Resource, the server looks it up by the numeric id at the end of the URI and returns its content. (The snippet below returns the content through TextResourceContents; for genuinely binary data, BlobResourceContents is the matching type.)

```csharp
.WithReadResourceHandler(async (ctx, ct) =>
{
    var uri = ctx.Params?.Uri;

    if (uri is null || !uri.StartsWith("test://static/resource/"))
    {
        throw new NotSupportedException($"Unknown resource: {uri}");
    }

    // The part after the prefix is a 1-based numeric id.
    int index = int.Parse(uri["test://static/resource/".Length..]) - 1;

    if (index < 0 || index >= ResourceGenerator.Resources.Count)
    {
        throw new NotSupportedException($"Unknown resource: {uri}");
    }

    var resource = ResourceGenerator.Resources[index];

    return new ReadResourceResult
    {
        Contents = [new TextResourceContents
        {
            Text = resource.Description!,
            MimeType = resource.MimeType,
            Uri = resource.Uri,
        }]
    };
})
```

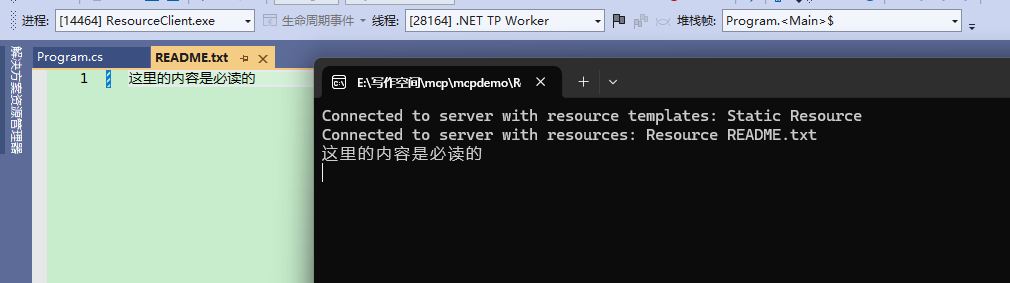

The following example shows how the client reads "test://static/resource/README.txt":

```csharp
var readmeResource = await client.ReadResourceAsync(resources.First().Uri);

// The cast yields null if the server returned a non-text content type.
var textContent = readmeResource.Contents.First() as TextResourceContents;
Console.WriteLine(textContent?.Text);
```

Resource Subscription

Clients can subscribe to updates for specific resources:

- The Client sends resources/subscribe with the resource URI.

- When the resource changes, the server sends notifications/resources/updated.

- The Client can use resources/read to get the latest content.

- The Client can use resources/unsubscribe to cancel the subscription.

In general, the MCP Server needs to implement a factory pattern that dynamically keeps track of which Resource Uris are subscribed. It sends an update only when a subscribed resource changes; changes to resources that nobody has subscribed to need not be pushed.

However, at present only WithStdioServerTransport() works for this; the author's experiment with WithHttpTransport() did not succeed.
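The bookkeeping described above can be as small as a thread-safe set of URIs. The following sketch is one possible shape for it, assuming a single connected client; the names Subscribe, Unsubscribe, and ShouldNotify are hypothetical and not part of the SDK.

```csharp
using System;
using System.Collections.Concurrent;

// Thread-safe set of subscribed resource URIs (a ConcurrentDictionary used as a set).
var subscriptions = new ConcurrentDictionary<string, byte>();

// Called from the subscribe handler; returns false if the URI was already subscribed.
bool Subscribe(string uri) => subscriptions.TryAdd(uri, 0);

// Called from the unsubscribe handler; returns false if the URI was not subscribed.
bool Unsubscribe(string uri) => subscriptions.TryRemove(uri, out _);

// Called when a resource changes: notify only if someone subscribed to it.
bool ShouldNotify(string changedUri) => subscriptions.ContainsKey(changedUri);

Subscribe("test://static/resource/README.txt");

Console.WriteLine(ShouldNotify("test://static/resource/README.txt"));
Console.WriteLine(ShouldNotify("test://static/resource/1"));
```

With several clients, the set would need to be keyed per session so that each client only receives updates for its own subscriptions.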

```csharp
// subscriptions is a collection of subscribed URIs defined elsewhere
// (assumed here to be something like a HashSet<string>).
.WithSubscribeToResourcesHandler(async (ctx, ct) =>
{
    var uri = ctx.Params?.Uri;

    if (uri is not null)
    {
        subscriptions.Add(uri);
    }

    return new EmptyResult();
})
.WithUnsubscribeFromResourcesHandler(async (ctx, ct) =>
{
    var uri = ctx.Params?.Uri;

    if (uri is not null)
    {
        subscriptions.Remove(uri);
    }

    return new EmptyResult();
});
```

For example, we could have an interface that manually triggers an update for the Client that has subscribed to "test://static/resource/README.txt".

await _mcpServer.SendNotificationAsync("notifications/resources/updated",

new

{

Uri = "test://static/resource/README.txt",

});

return "Notified";

The client needs only a few lines of code to subscribe.

client.RegisterNotificationHandler("notifications/resources/updated", async (message, ctx) =>

{

await Task.CompletedTask;

// Callback

});

await client.SubscribeToResourceAsync("test://static/resource/README.txt");

Best Practices

When implementing resource support:

- Use clear and descriptive resource names and URIs.

- Include useful descriptions to guide LLM understanding.

- Set appropriate MIME types when known.

- Implement resource templates for dynamic content.

- Use subscriptions for frequently changed resources.

- Handle errors gracefully with clear error messages.

- Consider paginating large resource lists.

- Cache resource contents when appropriate.

- Validate URIs before processing.

- Document your custom URI schemes.
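As an illustration of the pagination item above, here is a minimal sketch of cursor-based paging over a resource list. It assumes the cursor is simply a Base64-encoded offset, which is one possible choice rather than anything the protocol prescribes; GetPage and the generated URIs are hypothetical.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;
using System.Text;

// Hypothetical helper: returns one page plus an opaque cursor for the next page
// (null when no items remain). A real server should also reject malformed cursors.
static (List<string> Page, string? NextToken) GetPage(IReadOnlyList<string> all, string? token, int pageSize)
{
    int offset = token is null
        ? 0
        : int.Parse(Encoding.UTF8.GetString(Convert.FromBase64String(token)));
    var page = all.Skip(offset).Take(pageSize).ToList();
    int nextOffset = offset + page.Count;
    string? nextToken = nextOffset < all.Count
        ? Convert.ToBase64String(Encoding.UTF8.GetBytes(nextOffset.ToString()))
        : null;
    return (page, nextToken);
}

var uris = Enumerable.Range(1, 5).Select(i => $"test://static/resource/{i}").ToList();
string? current = null;
int pages = 0;
do
{
    // The client passes back the cursor it received until the server returns none.
    var (items, next) = GetPage(uris, current, pageSize: 2);
    Console.WriteLine(string.Join(", ", items));
    current = next;
    pages++;
} while (current is not null);
```

Keeping the cursor opaque means the client never has to understand how the server pages its data.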

Security Considerations

When exposing resources:

- Validate all resource URIs.

- Implement appropriate access controls.

- Sanitize file paths to prevent directory traversal.

- Handle binary data with caution.

- Consider rate limiting resource reads.

- Audit resource access.

- Encrypt sensitive data in transit.

- Validate MIME types.

- Implement timeouts for long-running read operations.

- Appropriately handle resource cleanup.
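For the directory traversal item in particular, a minimal sketch of path sanitization might look like the following. It assumes resources are files under a single root directory. ResolveSafePath and the "mcp-resources" folder name are hypothetical, and the check does not account for symbolic links.

```csharp
using System;
using System.IO;

// Hypothetical helper: maps a relative resource path to a file under rootDir and
// returns null when the normalized path would escape rootDir.
static string? ResolveSafePath(string rootDir, string relativePath)
{
    string root = Path.GetFullPath(rootDir);
    if (!root.EndsWith(Path.DirectorySeparatorChar))
        root += Path.DirectorySeparatorChar;

    // GetFullPath collapses "." and ".." segments, so the prefix check below
    // runs against the normalized path rather than the raw input.
    string full = Path.GetFullPath(Path.Combine(root, relativePath));
    return full.StartsWith(root, StringComparison.Ordinal) ? full : null;
}

string baseDir = Path.Combine(Path.GetTempPath(), "mcp-resources");
Console.WriteLine(ResolveSafePath(baseDir, "README.txt") is not null);    // True
Console.WriteLine(ResolveSafePath(baseDir, "../secret.txt") is not null); // False
```

A read handler could call such a check before opening any file and return an "unknown resource" error when it yields null.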

Implementing Prompts

MCP Server Prompts let servers define reusable prompt templates and workflows that clients can easily present to users and LLMs. They provide a powerful way to standardize and share common LLM interactions.

For example projects, see PromptsServer and PromptsClient.

Prompts in MCP are predefined templates that can:

- Accept dynamic parameters.

- Include context from resources.

- Link multiple interactions.

- Guide specific workflows.

- Present as UI elements (like slash commands).

MCP Server Example:

[McpServerPromptType]

public static class MyPrompts

{

[McpServerPrompt, Description("Creates a prompt to summarize the provided message.")]

public static ChatMessage Summarize([Description("The content to summarize")] string content) =>

new(ChatRole.User, $"Please summarize this content into a single sentence: {content}");

}

According to examples from the official framework repository, there are primarily two ways to use Prompts.

The first way is to return a string directly.

[McpServerPromptType]

public class SimplePromptType

{

[McpServerPrompt(Name = "simple_prompt"), Description("A prompt without arguments")]

public static string SimplePrompt() => "This is a simple prompt without arguments.";

}

The second way is to build up a conversation context and return it as a list of messages.

[McpServerPromptType]

public class ComplexPromptType

{

[McpServerPrompt(Name = "complex_prompt"), Description("A prompt with arguments")]

public static IEnumerable<ChatMessage> ComplexPrompt(

[Description("Temperature setting")] int temperature,

[Description("Output style")] string? style = null)

{

return [

new ChatMessage(ChatRole.User,$"This is a complex prompt with arguments: temperature={temperature}, style={style}"),

new ChatMessage(ChatRole.Assistant, "I understand. You've provided a complex prompt with temperature and style arguments. How would you like me to proceed?"),

new ChatMessage(ChatRole.User, [new DataContent(File.ReadAllBytes("img.png"), "image/png")])

];

}

}

The client can retrieve the list of prompts provided by the MCP server.

var prompts = await client.ListPromptsAsync();

foreach (var item in prompts)

{

Console.WriteLine($"prompt name :{item.Name}");

}

The client can automatically load the required prompts into the current AI conversation context.

var result = await prompts.First(x => x.Name == "test").GetAsync(new Dictionary<string, object?>() { ["message"] = "hello" });

IList<ChatMessage> chatMessages = result.ToChatMessages();

Best Practices

When implementing prompts:

- Use clear, descriptive prompt names

- Provide detailed descriptions for prompts and parameters

- Validate all required parameters

- Handle missing parameters gracefully

- Consider version control of prompt templates

- Cache dynamic content when appropriate

- Implement error handling

- Document expected parameter formats

- Consider the composability of prompts

- Test prompts with various inputs
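The items on validating and handling parameters can be sketched independently of the MCP SDK. The following minimal example assumes a template with {name} placeholders. RenderPrompt is a hypothetical helper, and the template text is borrowed from the Summarize prompt shown earlier.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical helper: verifies that every required argument is present and non-empty,
// then substitutes {name} placeholders in the template.
static string RenderPrompt(
    string template,
    IReadOnlyCollection<string> required,
    IReadOnlyDictionary<string, string?> arguments)
{
    var missing = required
        .Where(name => !arguments.TryGetValue(name, out var v) || string.IsNullOrWhiteSpace(v))
        .ToList();
    if (missing.Count > 0)
        throw new ArgumentException($"Missing required prompt argument(s): {string.Join(", ", missing)}");

    string rendered = template;
    foreach (var (key, value) in arguments)
        rendered = rendered.Replace("{" + key + "}", value ?? string.Empty);
    return rendered;
}

var promptArgs = new Dictionary<string, string?>
{
    ["content"] = "MCP lets servers expose tools, resources, and prompts.",
};
Console.WriteLine(RenderPrompt(
    "Please summarize this content into a single sentence: {content}",
    new[] { "content" },
    promptArgs));
```

Failing early with a message that names the missing arguments gives the client something concrete to show the user.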

UI Integration

Prompts can be presented in the client UI as:

- Slash commands

- Quick actions

- Context menu items

- Command panel entries

- Guided workflows

- Interactive forms

Implementing Sampling

Sampling is a powerful MCP feature that allows servers to request LLM completions from clients, enabling complex agentic behaviors while maintaining security and privacy.

The sampling process follows these steps:

- The server sends a sampling/createMessage request to the client

- The client reviews the request and can modify it

- The client samples from the LLM

- The client reviews the completion results

- The client returns the results to the server

This human-in-the-loop design ensures that users can control the content that the LLM sees and generates.
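The five steps can be simulated on the client side with plain delegates. This is a sketch under stated assumptions, not SDK code. HandleSamplingAsync and FakeLlmAsync are hypothetical, the review callbacks stand in for whatever approval UI the host provides, and the fake LLM only echoes its prompt.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical stand-in for the client's model call; a real client would invoke its LLM here.
static Task<string> FakeLlmAsync(string prompt) =>
    Task.FromResult($"[completion for: {prompt}]");

// Client-side handling of a sampling request received from the server (step 1).
static async Task<string?> HandleSamplingAsync(
    string requestedPrompt,
    Func<string, string?> reviewRequest,    // returns the (possibly edited) prompt, or null to reject
    Func<string, string?> reviewCompletion, // returns the (possibly edited) completion, or null to reject
    Func<string, Task<string>> llm)
{
    string? approvedPrompt = reviewRequest(requestedPrompt); // step 2: review or modify the request
    if (approvedPrompt is null) return null;

    string completion = await llm(approvedPrompt);           // step 3: sample from the LLM
    return reviewCompletion(completion);                     // steps 4 and 5: review, then return
}

string? result = await HandleSamplingAsync(
    "  Summarize README.txt  ",
    p => p.Trim(), // the reviewer tidies the request before it reaches the LLM
    c => c,        // the reviewer accepts the completion unchanged
    FakeLlmAsync);
Console.WriteLine(result ?? "rejected");
```

Either review callback can return null, so the user can stop the exchange before the model sees the request or before the server sees the answer.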

In the author's understanding, sampling suits AI Agent applications: the server issues a task to the client, the client uses its LLM to complete the task, and the client then returns the result to the server.

However, as it stands, the ModelContextProtocol C# SDK may lack this capability: IMcpServer is available only in the context of a client request to the server, so the server cannot locate a client or issue tasks to it at an arbitrary time simply by injecting IMcpServer.

For a stdio-based MCP server, sampling can be implemented as follows.

await ctx.Server.RequestSamplingAsync([

new ChatMessage(ChatRole.System, "You are a helpful test server"),

new ChatMessage(ChatRole.User, $"Resource {uri}, context: A new subscription was started"),

],

For an HTTP-based MCP server, the server cannot call the client in this way, so no further elaboration is needed here.
