This chapter covers atomic operations on data that multiple threads compete for.

Knowledge Points

Race Conditions

A race condition occurs when two or more threads access shared data and try to change it at the same time, so the result depends on the order in which the threads happen to run.

A data race is a closely related problem in which threads access the same memory location without synchronization and at least one of them writes. Both can lead to memory (data) corruption or unpredictable behavior.

Thread Synchronization

Suppose N threads need to perform the same operation, and while one thread is performing it the others must wait their turn. Coordinating threads in this way is called thread synchronization.

Race conditions in a multithreaded environment typically result from incorrect synchronization.

CPU Time Slices and Context Switching

A time slice (timeslice) is the short interval of CPU time that the operating system allocates to each running process.

Please refer to: https://zh.wikipedia.org/wiki/时间片

A context switch, also called a process switch or task switch, is the CPU switching from one process or thread to another.

Please refer to: https://zh.wikipedia.org/wiki/上下文交換

Blocking

A blocked thread is a thread that is waiting. While it is blocked, it uses as little CPU time as possible.

When a thread goes from the running state (Running) to a blocked state (WaitSleepJoin), the operating system gives the CPU time slice it was using to other threads. When the thread resumes running (Running), the operating system assigns it a time slice again.

Each such reassignment of a time slice involves a context switch.

Kernel Mode and User Mode

Only the operating system can switch or suspend threads, so blocking a thread is handled by the operating system. This is called kernel mode.

Thread.Sleep(), Thread.Join(), and similar methods block the thread in kernel mode to achieve thread synchronization (waiting).

If a thread only needs to wait for a very short time, the context switches caused by blocking can cost more than the wait itself. In that case we can spin (loop while checking a condition) instead of blocking; this approach is called user mode.
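As a rough illustration of user-mode waiting, the sketch below spins until another thread sets a flag. The names SpinDemo, ready, and WaitForFlag are hypothetical and only serve the example; SpinWait.SpinUntil and Volatile.Read are the .NET helpers assumed here, and a hand-written loop would behave similarly.

```csharp
using System;
using System.Threading;

public static class SpinDemo
{
    // Hypothetical flag set by a worker thread: 0 = not ready, 1 = ready.
    private static int ready = 0;

    public static bool WaitForFlag()
    {
        ready = 0;
        var worker = new Thread(() =>
        {
            // A very short piece of work, then publish the flag.
            Interlocked.Exchange(ref ready, 1);
        });
        worker.Start();

        // Spin in user mode instead of blocking in the kernel.
        // SpinUntil backs off (yields or sleeps briefly) if the wait drags on.
        SpinWait.SpinUntil(() => Volatile.Read(ref ready) == 1);

        worker.Join();
        return Volatile.Read(ref ready) == 1;
    }

    public static void Main()
    {
        Console.WriteLine("flag observed: " + WaitForFlag());
    }
}
```

Spinning only pays off when the expected wait is shorter than the cost of a context switch; for longer waits, blocking is usually the better choice.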

Interlocked Class

The Interlocked class provides atomic operations for variables shared by multiple threads.

With Interlocked, you can avoid race conditions on simple operations without blocking threads the way lock and Monitor do.

Interlocked is a static class. Let us first look at its commonly used methods:

| Method | Function |
|-----------------------|-----------------------------------------------------------|
| CompareExchange() | Compares two values for equality and, if they are equal, replaces the first value. |
| Decrement() | Decrements the value of the specified variable atomically and stores the result. |
| Exchange() | Sets the specified value atomically and returns the original value. |
| Increment() | Increments the value of the specified variable atomically and stores the result. |
| Add() | Adds two numbers and replaces the first integer with the sum; this operation is done atomically. |
| Read() | Returns a value that is loaded atomically. |

For all methods, please see: https://docs.microsoft.com/zh-cn/dotnet/api/system.threading.interlocked?view=netcore-3.1#methods

1. Issues

In C#, some assignments and simple arithmetic operations are not atomic, so they can produce wrong results when several threads perform them at the same time.

We can use lock and Monitor to solve this, but is there a simpler way?

First, we write the following code:

    private static int sum = 0;

    public static void AddOne()
    {
        for (int i = 0; i < 1_000_000; i++)
        {
            sum += 1;
        }
    }

When this method finishes, sum has been increased by 1,000,000.

We call it five times in the Main method:

    static void Main(string[] args)
    {
        AddOne();
        AddOne();
        AddOne();
        AddOne();
        AddOne();
        Console.WriteLine("sum = " + sum);
    }

The result is always 5000000, without question.

However, running the calls one after another is slow. What if they run at the same time on multiple threads?

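Let's modify the Main method so that five threads run AddOne at the same time:

    static void Main(string[] args)
    {
        var threads = new Thread[5];
        for (int i = 0; i < threads.Length; i++)
        {
            threads[i] = new Thread(AddOne);
            threads[i].Start();
        }

        // Wait for every thread to finish before printing the result.
        foreach (var thread in threads) thread.Join();
        Console.WriteLine("sum = " + sum);
    }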

In one run, the author got sum = 2633938.

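We stored the result of each computation in an array. A portion of it looks like this:

    8757
    8758
    8760
    8760
    8760
    8761
    8762
    8763
    8764
    8765
    8766
    8766
    8768
    8769

Some values appear more than once (8760, 8766) and others are missing (8759, 8767), which shows that threads overwrote each other's updates.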

When multiple threads operate on the same variable, one thread does not know that another thread has already changed it, so it computes from a stale value and the final result is not what we expect.

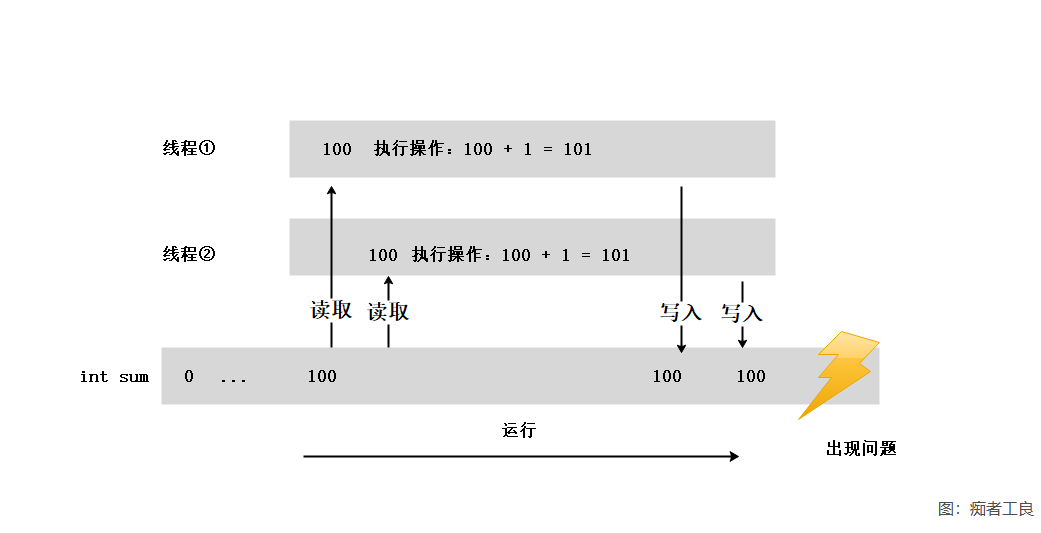

We can explain this with the following diagram:

Thus, we need atomic operations: at any moment, only one thread may carry out the operation, where "the operation" means the whole sequence of reading, calculating, and writing.
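To make the read, calculate, write sequence concrete, here is a minimal single-threaded sketch that replays one unlucky interleaving of two threads. LostUpdateDemo, Replay, readByA, and readByB are hypothetical names used only for illustration; the real interleaving is decided by the scheduler and differs from run to run.

```csharp
using System;

public static class LostUpdateDemo
{
    // Replays, step by step, what can happen when threads A and B
    // both execute "sum += 1" without synchronization.
    public static int Replay(int start)
    {
        int sum = start;

        int readByA = sum;   // A reads the current value
        int readByB = sum;   // B reads the same value before A has written

        sum = readByA + 1;   // A writes start + 1
        sum = readByB + 1;   // B overwrites it with start + 1 again

        return sum;          // two increments ran, but sum grew by only 1
    }

    public static void Main()
    {
        Console.WriteLine("after two increments: " + Replay(8759)); // prints 8760, not 8761
    }
}
```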

Of course, we could use lock or Monitor to solve this, but that adds noticeable overhead for such a small operation.
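For comparison, a lock-based version of the counter might look like the sketch below. LockedCounter, sumLock, and Run are hypothetical names, and the loop count of 1,000,000 is assumed from the expected total of 5000000 across five threads.

```csharp
using System;
using System.Threading;

public static class LockedCounter
{
    private static int sum = 0;

    // Hypothetical lock object that guards sum.
    private static readonly object sumLock = new object();

    public static void AddOne()
    {
        for (int i = 0; i < 1_000_000; i++)
        {
            // Only one thread at a time may run the read-add-write sequence.
            lock (sumLock)
            {
                sum += 1;
            }
        }
    }

    // Runs AddOne on threadCount threads and returns the final sum.
    public static int Run(int threadCount)
    {
        sum = 0;
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(AddOne);
            threads[i].Start();
        }
        foreach (var t in threads) t.Join();
        return sum;
    }

    public static void Main()
    {
        Console.WriteLine("sum = " + Run(5)); // prints sum = 5000000
    }
}
```

The result is correct, but every increment now acquires and releases a lock, and a thread that finds the lock taken may end up blocked.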

This is where Interlocked comes in: for some simple calculations, Interlocked makes the operation atomic.

2. Interlocked.Increment

Interlocked.Increment() atomically increments a variable.

We modify the AddOne method:

    public static void AddOne()
    {
        for (int i = 0; i < 1_000_000; i++)
        {
            Interlocked.Increment(ref sum);
        }
    }

Run the code again, and the result is sum = 5000000, which is correct.

This shows that Interlocked can perform atomic operations on simple value types.
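Interlocked.Increment() also returns the incremented value, which makes it handy for handing out unique numbers. The sketch below is one possible use, not something taken from the original example: IncrementDemo, NextId, and CountDistinctIds are hypothetical names.

```csharp
using System;
using System.Collections.Concurrent;
using System.Linq;
using System.Threading;

public static class IncrementDemo
{
    private static int lastId = 0;

    // Increment returns the value after the increment,
    // so no two callers ever receive the same id.
    public static int NextId() => Interlocked.Increment(ref lastId);

    // Requests ids from several threads and counts how many distinct ids came back.
    public static int CountDistinctIds(int threadCount, int idsPerThread)
    {
        lastId = 0;
        var ids = new ConcurrentBag<int>();
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                for (int j = 0; j < idsPerThread; j++) ids.Add(NextId());
            });
            threads[i].Start();
        }
        foreach (var t in threads) t.Join();
        return ids.Distinct().Count();
    }

    public static void Main()
    {
        Console.WriteLine("distinct ids = " + CountDistinctIds(5, 10_000)); // prints distinct ids = 50000
    }
}
```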

3. Interlocked.Exchange

Interlocked.Exchange() performs assignment atomically.

This method has multiple overloads; let's look at one of them:

    public static int Exchange(ref int location1, int value);

It assigns value to location1 and returns the value location1 held before the change.

Testing:

    static void Main(string[] args)
    {
        int a = 1;
        int b = 5;

        // a before change is 1
        int result1 = Interlocked.Exchange(ref a, 2);
        Console.WriteLine($"a's new value a = {a} | a's previous value result1 = {result1}");
        Console.WriteLine();

        // a before change is 2, b is 5
        int result2 = Interlocked.Exchange(ref a, b);
        Console.WriteLine($"a's new value a = {a} | b remains unchanged b = {b} | a's previous value result2 = {result2}");
    }

Additionally, Exchange() also has overloads for reference types:

Exchange<T>(T, T)
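As a hedged sketch of the reference-type overload, the example below atomically swaps in a new object and gets the old one back. ExchangeDemo, Settings, Publish, and CurrentName are hypothetical names invented for this illustration.

```csharp
using System;
using System.Threading;

public static class ExchangeDemo
{
    // Hypothetical immutable settings object shared between threads.
    public sealed class Settings
    {
        public string Name { get; }
        public Settings(string name) { Name = name; }
    }

    private static Settings current = new Settings("v1");

    // Atomically publishes a new Settings instance and returns the one it replaced.
    public static Settings Publish(Settings next)
    {
        return Interlocked.Exchange(ref current, next);
    }

    public static string CurrentName() => Volatile.Read(ref current).Name;

    public static void Main()
    {
        Settings old = Publish(new Settings("v2"));
        Console.WriteLine($"old = {old.Name} | current = {CurrentName()}"); // prints old = v1 | current = v2
    }
}
```

Because the swap is a single atomic step, other threads see either the old object or the new one, never a half-updated reference.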

4. Interlocked.CompareExchange

One of the overloads:

    public static int CompareExchange(ref int location1, int value, int comparand);

It compares two 32-bit signed integers for equality and, if they are equal, replaces the first value.

If comparand is equal to the value in location1, value is stored in location1. Otherwise, nothing is changed. In both cases, the method returns the original value of location1.

Be careful: the comparison is between location1 and comparand, not value!

An example of usage is as follows:

    static void Main(string[] args)
    {
        int location1 = 1;
        int value = 2;
        int comparand = 3;

        Console.WriteLine("Before execution:");
        Console.WriteLine($" location1 = {location1} | value = {value} | comparand = {comparand}");

        Console.WriteLine("When location1 != comparand");
        int result = Interlocked.CompareExchange(ref location1, value, comparand);
        Console.WriteLine($" location1 = {location1} | value = {value} | comparand = {comparand} | location1 value before the change = {result}");

        Console.WriteLine("When location1 == comparand");
        comparand = 1;
        result = Interlocked.CompareExchange(ref location1, value, comparand);
        Console.WriteLine($" location1 = {location1} | value = {value} | comparand = {comparand} | location1 value before the change = {result}");
    }
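A common way to use CompareExchange() is a retry loop: read the current value, compute a new one, and write it only if nobody changed the variable in the meantime. The sketch below applies this pattern to keep a running maximum; CasDemo, UpdateMax, and Run are hypothetical names, and the pattern is shown as one possible use rather than anything the original example prescribes.

```csharp
using System;
using System.Threading;

public static class CasDemo
{
    private static int max = 0;

    // Raises max to candidate if candidate is larger.
    // If another thread changed max between our read and our write,
    // CompareExchange returns a value other than 'seen' and we retry.
    public static void UpdateMax(int candidate)
    {
        int seen;
        do
        {
            seen = Volatile.Read(ref max);
            if (candidate <= seen) return; // nothing to do
        }
        while (Interlocked.CompareExchange(ref max, candidate, seen) != seen);
    }

    // Each thread i submits the values i*1000 .. i*1000 + 999.
    public static int Run(int threadCount)
    {
        max = 0;
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            int offset = i * 1000; // local copy so each lambda captures its own value
            threads[i] = new Thread(() =>
            {
                for (int j = 0; j < 1000; j++) UpdateMax(offset + j);
            });
            threads[i].Start();
        }
        foreach (var t in threads) t.Join();
        return max;
    }

    public static void Main()
    {
        Console.WriteLine("max = " + Run(4)); // prints max = 3999
    }
}
```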

5. Interlocked.Add

Interlocked.Add() adds two 32-bit integers and replaces the first integer with the sum, as a single atomic operation. It returns the new value stored in location1.

    public static int Add(ref int location1, int value);

This method works only with int or long.

Returning to the multithreaded summation problem from the first section, we can use Interlocked.Add() in place of Interlocked.Increment().

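    static void Main(string[] args)
    {
        var threads = new Thread[5];
        for (int i = 0; i < threads.Length; i++)
        {
            threads[i] = new Thread(AddOne);
            threads[i].Start();
        }

        // Wait for every thread to finish before printing the result.
        foreach (var thread in threads) thread.Join();
        Console.WriteLine("sum = " + sum);
    }

    private static int sum = 0;

    public static void AddOne()
    {
        for (int i = 0; i < 1_000_000; i++)
        {
            Interlocked.Add(ref sum, 1);
        }
    }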
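Add() becomes more interesting when the amount varies. In the minimal sketch below, each thread adds the numbers 1 through 1000 to a shared long total. AddDemo, total, and Run are hypothetical names chosen for the illustration.

```csharp
using System;
using System.Threading;

public static class AddDemo
{
    private static long total = 0;

    // Every thread adds 1 + 2 + ... + 1000 = 500500 to the shared total.
    public static long Run(int threadCount)
    {
        Interlocked.Exchange(ref total, 0);
        var threads = new Thread[threadCount];
        for (int i = 0; i < threadCount; i++)
        {
            threads[i] = new Thread(() =>
            {
                for (int n = 1; n <= 1000; n++)
                {
                    // Uses the long overload: Add(ref long, long).
                    Interlocked.Add(ref total, n);
                }
            });
            threads[i].Start();
        }
        foreach (var t in threads) t.Join();
        return Interlocked.Read(ref total);
    }

    public static void Main()
    {
        Console.WriteLine("total = " + Run(4)); // prints total = 2002000
    }
}
```

Passing a negative value subtracts, which is useful because Interlocked has no separate subtract method.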

6. Interlocked.Read

Interlocked.Read() returns a 64-bit value that is loaded atomically.

On 64-bit systems, the Read method is unnecessary because 64-bit reads are already atomic. On 32-bit systems, a 64-bit read is not atomic unless it is done with Read.

    public static long Read(ref long location);

In other words, Read only makes a difference on 32-bit systems.
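For completeness, here is a minimal sketch of how Read() might be used with a long counter that another thread is updating. ReadDemo, ticks, and Run are hypothetical names; on a 64-bit process a plain read would behave the same way, so the example only shows the calling pattern.

```csharp
using System;
using System.Threading;

public static class ReadDemo
{
    private static long ticks = 0;

    // A writer thread increments the counter while the caller samples it.
    public static long Run(int increments)
    {
        Interlocked.Exchange(ref ticks, 0);
        var writer = new Thread(() =>
        {
            for (int i = 0; i < increments; i++) Interlocked.Increment(ref ticks);
        });
        writer.Start();

        // Each sample is a complete 64-bit value, never half old and half new,
        // even in a 32-bit process.
        long sample = Interlocked.Read(ref ticks);
        Console.WriteLine("sample while writing: " + sample);

        writer.Join();
        return Interlocked.Read(ref ticks);
    }

    public static void Main()
    {
        Console.WriteLine("final = " + Run(100_000)); // prints final = 100000
    }
}
```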

I haven't found a concrete scenario that needs it.

You can refer to: https://www.codenong.com/6139699/

It does not seem very useful, so I'll skip it here.
