- Notes

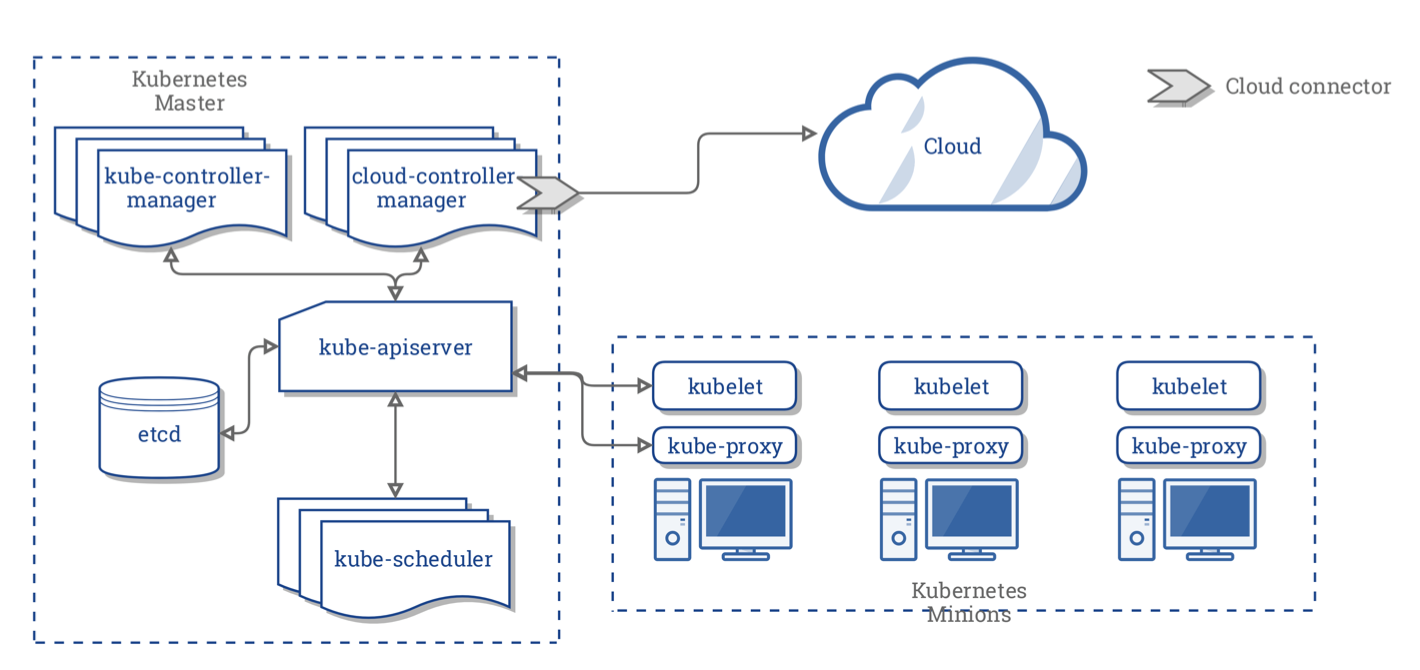

- Components of a Kubernetes cluster

- What are containerized applications?

- What are Kubernetes containers?

- What are Kubernetes pods?

- What is the difference between containers vs. pods?

- What are Kubernetes nodes?

- What is a Kubernetes Control Plane?

- What is a Kubernetes Cluster?

- What are Kubernetes volumes?

- How do the components of Kubernetes work together?

Notes

This content is sourced from https://www.vmware.com/topics/glossary and translated. Please refer to the content policy at https://www.vmware.com/community_terms.html; for copying and translating this content, refer to the license at http://creativecommons.org/licenses/by-nc/3.0/.

This article collects, translates, and organizes terminology from VMware's glossary, primarily introducing some terms and concepts related to the components of Kubernetes.

Components of a Kubernetes cluster

When we talk about Kubernetes and application deployment, we often run into concepts such as containers, nodes, and pods, along with a range of terms that can be overwhelming. To make Kubernetes easier to understand, this article introduces the concepts involved in deploying and running applications on Kubernetes.

A Kubernetes cluster consists of multiple hardware and software components that work together to manage the deployment and execution of containerized applications. The key terms for these components are:

| Component | Chinese term |
|---|---|
| Cluster | 集群 |
| Node | 节点 |
| Pod | (left untranslated) |
| Container | 容器 |
| Containerized Application | 容器化应用 |

The following content will introduce these components from the smallest to the largest granularity.

What are containerized applications?

Containerized applications are applications that are bundled into containers. We often say that we package an application into an image and deploy it with Docker. When your application runs successfully on Docker, it is a containerized application.

Definition:

Containerized applications are bundled with their required libraries, binaries, and configuration files into a container.

Of course, just because an application can be packaged into a container does not mean it makes a good product; not every application is an excellent candidate for containerization. For example, applications that domain-driven design (DDD) calls a "big ball of mud" are difficult to migrate and configure because of their complex design, heavy dependencies, and instability, which marks them as evidently unsuccessful products.

Through years of experience, many developers have summarized their findings into twelve guiding principles for cloud computing applications:

1. Codebase: One codebase tracked in revision control, many deploys

2. Dependencies: Explicitly declare and isolate dependencies

3. Config: Store config in the environment

4. Backing services: Treat backing services as attached resources (extensible, rather than built into a big ball of mud)

5. Build, release, run: Strictly separate build and run stages (a product that does not even distinguish Debug from Release builds is truly garbage)

6. Processes: Execute the app as one or more stateless processes

7. Port binding: Export services via port binding

8. Concurrency: Scale out via the process model

9. Disposability: Maximize robustness with fast startup and graceful shutdown

10. Dev/prod parity: Keep development, staging, and production as similar as possible

11. Logs: Treat logs as event streams

12. Admin processes: Run admin/management tasks as one-off processes

The translation may be imperfect in places; readers can refer to the original text:

https://www.vmware.com/topics/glossary/content/components-kubernetes
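As one illustration of principle 3 (store config in the environment), the following is a minimal Python sketch rather than a prescribed implementation. The variable names `APP_DATABASE_URL`, `APP_LISTEN_PORT`, and `APP_DEBUG`, and their default values, are hypothetical and chosen only for this example.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class AppConfig:
    """Settings the app needs; values come from the environment, not the code."""
    database_url: str
    listen_port: int
    debug: bool


def load_config(env=None):
    """Build an AppConfig from environment variables.

    The variable names and defaults are hypothetical examples.
    Passing a plain dict as `env` makes the function easy to test.
    """
    env = os.environ if env is None else env
    return AppConfig(
        database_url=env.get("APP_DATABASE_URL", "sqlite:///local.db"),
        listen_port=int(env.get("APP_LISTEN_PORT", "8080")),
        debug=env.get("APP_DEBUG", "false").lower() == "true",
    )
```

Because the same image reads different values in each environment, one build can be promoted unchanged from development to production, which also supports principle 10.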

Many popular programming languages and applications are containerized and stored in open-source repositories. However, building an application container with only the libraries and binaries the application needs to run is more efficient than importing every available resource. Containers can be created programmatically, which makes it possible to set up continuous integration and deployment (CI/CD) pipelines for greater efficiency. Containerizing applications falls within the developer's domain, so developers need to master how to do it.

What are Kubernetes containers?

Containers are standardized, self-contained execution enclosures for applications.

Typically, a container holds an application together with the libraries, files, and other resources needed to run its binary. For example, a Linux file system combined with an application forms a simple container. Restricting a container to a single process makes it easier to diagnose issues and update the application. Unlike VMs (virtual machines), containers do not include their own operating system kernel; they share the kernel of the host, which keeps them lightweight. Kubernetes containers belong to the realm of development.

What are Kubernetes pods?

A Pod is the smallest execution unit in a Kubernetes cluster. In Kubernetes, containers do not run directly on cluster nodes; instead, one or more containers are encapsulated within a Pod. All containers within a Pod share the same resources and local network, which simplifies communication between the applications in the Pod. On each node, Pods rely on an agent called the kubelet to communicate with the Kubernetes API and the rest of the cluster. Although developers still need API access to manage the cluster, Pod management is transitioning into the realm of DevOps.

As the load on a Pod increases, Kubernetes can automatically replicate the Pod to reach the desired scale (deploying more Pods that provide the same service, with the load balanced across them). It is therefore important to design a Pod to be as lightweight as possible, to minimize wasted resources when scaling up and down.
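The replication described above can be sketched with the scaling rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas × current metric / target metric). The function below is only an illustration of that arithmetic. The name `desired_replicas` and the clamping defaults are assumptions for this example, not part of any Kubernetes API.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Apply the documented ratio rule, then clamp to the allowed range.

    The min/max defaults are illustrative; real autoscalers take them
    from their own configuration.
    """
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    wanted = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, wanted))
```

For example, three Pods averaging 90% CPU against a 60% target would be scaled to five Pods, while four Pods averaging 30% against the same target would be scaled down to two.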

Pods are perceived to be more of a specialization within the DevOps domain.

What is the difference between containers vs. pods?

Containers encapsulate the code (compiled binary executable) required to execute specific processes or functions. Before Kubernetes, organizations could run containers directly on physical or virtual servers but lacked the scalability and flexibility provided by Kubernetes clusters.

Pods provide an abstraction for containers, allowing one or more applications to be packaged together in a Pod, which is the smallest execution unit in a Kubernetes cluster. For instance, a Pod may contain init containers that prepare the environment for the application containers and then terminate before those application containers begin execution. Pods are the smallest units that can be replicated in the cluster, and the containers within a Pod are scaled in unison.

If an application requires access to persistent storage, the Pod also declares the storage volumes it needs alongside its containers.
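To make the relationship between a Pod, its init containers, and its application containers concrete, here is a minimal sketch of a Pod manifest built as a Python dictionary and serialized to JSON. The field names (`initContainers`, `containers`, `volumes`, `volumeMounts`, `emptyDir`) follow the Kubernetes Pod API, but the Pod name, container names, images, and command are placeholders chosen for this example.

```python
import json

# A Pod with one init container and one application container.
# Both mount the same scratch volume, so the init container can
# prepare files that the application container reads later.
pod_manifest = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "demo-pod"},  # placeholder name
    "spec": {
        "initContainers": [
            {
                "name": "prepare-env",
                "image": "busybox:1.36",  # placeholder image
                "command": ["sh", "-c", "echo ready > /work/ready"],
                "volumeMounts": [{"name": "workdir", "mountPath": "/work"}],
            }
        ],
        "containers": [
            {
                "name": "app",
                "image": "nginx:1.25",  # placeholder image
                "volumeMounts": [{"name": "workdir", "mountPath": "/work"}],
            }
        ],
        "volumes": [{"name": "workdir", "emptyDir": {}}],
    },
}

# JSON manifests are accepted wherever YAML manifests are.
manifest_json = json.dumps(pod_manifest, indent=2)
```

Writing `manifest_json` to a file would give a manifest that could be applied with `kubectl apply -f`. The init container runs to completion first, and only then does the `app` container start.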

What are Kubernetes nodes?

Pods are the smallest execution units in Kubernetes, whereas Nodes are the smallest computing hardware units in Kubernetes. Nodes can be physical local servers or virtual machines.

Like containers, Nodes provide an abstraction layer. If the operational team considers a Node merely as a resource with processing power and memory, then each Node can be interchangeable with the next. Multiple Nodes work together to form a Kubernetes cluster, which automatically allocates workloads according to changing demands. If a node fails, it is automatically removed from the cluster, with other nodes taking over. Each node runs an agent called kubelet, which communicates with the cluster control plane.
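The idea that Nodes are interchangeable pools of capacity, and that workloads move when a Node fails, can be illustrated with a toy model. This is a deliberately simplified sketch, not how the real Kubernetes scheduler works (it filters and scores Nodes on many criteria). The `Node` class and the `schedule` and `fail_node` functions are hypothetical names invented for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A Node seen only as a named pool of CPU capacity."""
    name: str
    cpu_capacity: float
    pods: dict = field(default_factory=dict)  # pod name -> CPU request

    def free_cpu(self):
        return self.cpu_capacity - sum(self.pods.values())


def schedule(pod_name, cpu_request, nodes):
    """Place a pod on the node with the most free CPU that can fit it."""
    candidates = [n for n in nodes if n.free_cpu() >= cpu_request]
    if not candidates:
        return None  # no node fits; the pod would stay pending
    best = max(candidates, key=lambda n: n.free_cpu())
    best.pods[pod_name] = cpu_request
    return best.name


def fail_node(failed, nodes):
    """Drop a failed node and reschedule its pods on the remaining nodes."""
    remaining = [n for n in nodes if n is not failed]
    placements = {}
    for pod_name, cpu in failed.pods.items():
        placements[pod_name] = schedule(pod_name, cpu, remaining)
    return remaining, placements
```

In this model the caller never chooses a Node by name; it only states how much CPU a Pod needs, which mirrors the abstraction described above.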

Nodes fall under the domain of DevOps and IT.

What is the difference between Kubernetes pods vs. nodes?

Pods are an abstraction for executable code, while Nodes are an abstraction for computer hardware, making this comparison somewhat like comparing apples and oranges.

Pods are the smallest execution units of Kubernetes, composed of one or more containers;

Nodes are the physical servers or virtual machines that make up a Kubernetes cluster. Nodes are interchangeable and are typically not handled individually by users or IT unless maintenance is required.

What is a Kubernetes Control Plane?

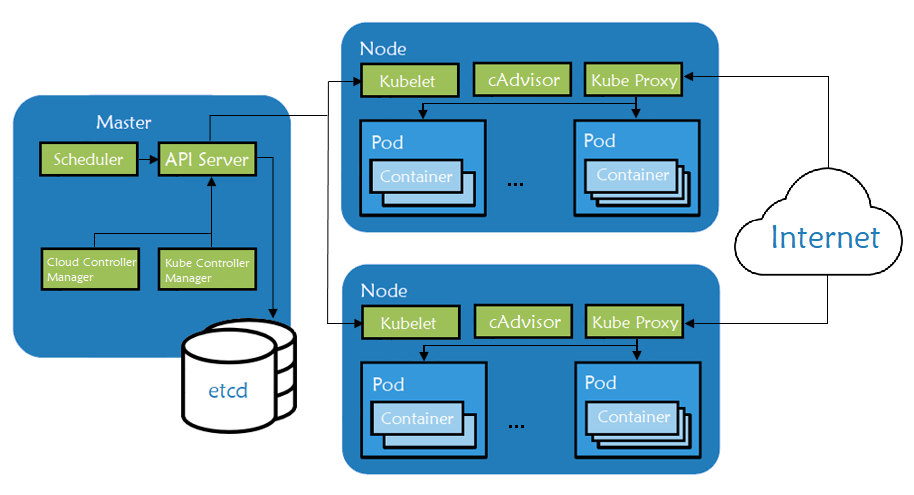

The Kubernetes control plane consists of the controllers for the Kubernetes cluster, mainly including apiserver, etcd, scheduler, and controller-manager.

This has been mentioned in the first part, and there's no need for further elaboration here.

What is a Kubernetes Cluster?

A Kubernetes cluster is made up of Nodes, which can be virtual machines or physical servers. When you use Kubernetes, most of the time you are managing the cluster. A cluster needs at least one instance of the Kubernetes control plane running on a Node, and at least one Node for Pods to run on. Typically, a cluster contains multiple Nodes so that it can absorb changes in workload.

What is the difference between Kubernetes Nodes vs. Clusters?

Nodes are the smallest elements in a cluster, and a cluster is composed of Nodes. The cluster is a collective that shares the overall execution of Pods, which is reflected in the name of Borg, the Google cluster management project that preceded Kubernetes.

What are Kubernetes volumes?

Since containers were initially designed to be ephemeral and stateless, the issue of storage persistence was rarely addressed. However, with increasing numbers of applications being containerized that require reading from and writing to persistent storage, the demand for access to persistent storage volumes has arisen.

To address this, Kubernetes has persistent volumes. What makes them unique is that they exist outside the cluster and can be mounted to the cluster without being associated with specific nodes, containers, or pods.

Persistent volumes can be local or cloud-based and fall under the domain of DevOps and IT.
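In practice, a Pod usually reaches a persistent volume through a PersistentVolumeClaim. The sketch below builds both objects as Python dictionaries and serializes them to JSON. The field names (`accessModes`, `resources.requests.storage`, `persistentVolumeClaim.claimName`, `volumeMounts`) follow the Kubernetes API, while the object names, image, size, and mount path are placeholders chosen for this example.

```python
import json

# A request for 1Gi of storage, independent of any node or pod.
claim = {
    "apiVersion": "v1",
    "kind": "PersistentVolumeClaim",
    "metadata": {"name": "app-data"},  # placeholder name
    "spec": {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": "1Gi"}},
    },
}

# A Pod that mounts the claimed storage at /opt/app.
pod = {
    "apiVersion": "v1",
    "kind": "Pod",
    "metadata": {"name": "app-with-storage"},  # placeholder name
    "spec": {
        "containers": [
            {
                "name": "app",
                "image": "nginx:1.25",  # placeholder image
                "volumeMounts": [{"name": "data", "mountPath": "/opt/app"}],
            }
        ],
        "volumes": [
            {"name": "data", "persistentVolumeClaim": {"claimName": "app-data"}}
        ],
    },
}

# One JSON document per object; each could be saved and applied separately.
manifests_json = [json.dumps(m, indent=2) for m in (claim, pod)]
```

The Pod refers to the claim only by name, so the same claim can be remounted by a replacement Pod on a different Node, which is what keeps the data independent of any single Pod or container.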

In Docker, we can manage volumes using the following commands:

    # Create a custom container volume
    docker volume create {volume-name}

    # List all container volumes
    docker volume ls

    # Inspect details of a specified container volume
    docker volume inspect {volume-name}

When running a container, we can use -v to map a host directory into the container, or to mount a named volume into it:

    # Map the host directory /var/tmp to /opt/app in the container
    docker run -itd ... -v /var/tmp:/opt/app ...

    # Mount a named volume at /opt/app in the container
    docker run -itd ... -v {volume-name}:/opt/app ...

How do the components of Kubernetes work together?

In simple terms, applications are first created in or migrated into containers, and the containers then run in Pods created by the Kubernetes cluster.

Once Pods are created, Kubernetes assigns them to one or more Nodes in the cluster and ensures that the correct number of replicas is running on those Nodes. Kubernetes continuously monitors the cluster to ensure that each group of containers operates as specified.
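Keeping the correct number of replicas running amounts to repeatedly comparing the desired state with the observed state and acting on the difference. The function below is a toy sketch of one such comparison step. The name `reconcile`, the `web-N` naming scheme, and the choice of which Pods to delete are assumptions made for this example, not Kubernetes behavior.

```python
def reconcile(desired, running_pods, name_prefix="web"):
    """Return (pods_to_create, pods_to_delete) needed to reach `desired`.

    `running_pods` is the list of pod names currently observed.
    """
    if desired < 0:
        raise ValueError("desired must not be negative")
    running = sorted(running_pods)
    missing = desired - len(running)
    if missing > 0:
        # Too few replicas: pick unused names for the new pods.
        existing = set(running)
        to_create = []
        index = 0
        while len(to_create) < missing:
            candidate = f"{name_prefix}-{index}"
            if candidate not in existing:
                to_create.append(candidate)
            index += 1
        return to_create, []
    # Enough or too many replicas: delete any surplus.
    return [], running[desired:]
```

Running this step in a loop, and applying its result each time, would converge on the desired replica count even when Pods disappear unexpectedly.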
