Table of Contents

- Service

- Creation and Phenomenon of Service

- Service Definition

- Endpoint Slices

- Creating Endpoint and Service

- Service

- Creating Application

- Creating Endpoint

Deploying a Deployment together with a Service was introduced in A Brief Introduction to Kubernetes (8): External Access to the Cluster. This article supplements it and discusses Service in more depth.

Article link: https://www.cnblogs.com/whuanle/p/14685430.html

Service

A Service is an abstract way to expose an application running on a set of Pods as a network service. If we deploy Pods with a Deployment, we can create a Service based on that Deployment.

In Kubernetes (K8S), each Pod has its own unique IP address, whereas a Service gives a group of Pods a single DNS name and load-balances traffic across them.

For example, take a group of nginx Pods. When nginx scales up or down, or a Pod's IP/port changes for some other reason, neither the IP nor the port is fixed, and it is hard to know the addresses of newly created Pods. Moreover, once a Pod is deleted, its IP is no longer usable.

Another scenario: one group of Pods acts as the frontend (for example, a web service) and another group acts as the backend (for example, MySQL). How does the frontend find and keep track of the IP addresses it must connect to in order to use the backend part of the workload?

This is exactly the problem Service solves. A Kubernetes Service defines an abstraction: a logical set of Pods and a policy for accessing them (this pattern is sometimes called a microservice). Once we create a Service for a group of Pods (for example, Pods created by a Deployment), it no longer matters how many replicas exist or how they change. The frontend does not need to care which backend replica it is calling, nor does it need to know or track the state of the backend Pods. The Service abstracts away the link between frontend and backend and decouples them.

Creation and Phenomenon of Service

Let's quickly create Pods with the commands below and expose them as a Service; the Pods will be scheduled onto various nodes.

```shell
kubectl create deployment nginx --image=nginx:latest --replicas=3
kubectl expose deployment nginx --type=LoadBalancer --port=80
```

Then run the following command to view the Services:

```shell
kubectl get services
```

The output shows that the external access port is 30424:

```
NAME         TYPE           CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
kubernetes   ClusterIP      10.96.0.1        <none>        443/TCP        3d6h
nginx        LoadBalancer   10.101.132.236   <pending>     80:30424/TCP   39s
```

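At this point, we can access the Service from the public network through a node's public IP and port 30424. EXTERNAL-IP stays <pending> because no external load balancer has been assigned to the Service.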
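The PORT(S) column packs the Service port and the node port into one field (`port:nodePort/protocol`), and the node port is the one to combine with a node's public IP. As a small illustration, the hypothetical helper below splits that field apart. It is a sketch that only parses the text format shown in the output above; it does not talk to a cluster.

```python
import re
from typing import List, NamedTuple, Optional


class ServicePort(NamedTuple):
    port: int                 # port exposed on the Service's cluster IP
    node_port: Optional[int]  # port opened on every node (NodePort/LoadBalancer only)
    protocol: str


# One entry looks like "80/TCP" or "80:30424/TCP".
_ENTRY = re.compile(r"^(\d+)(?::(\d+))?/([A-Z]+)$")


def parse_ports_column(column: str) -> List[ServicePort]:
    """Parse the PORT(S) column printed by `kubectl get services`."""
    ports = []
    for entry in column.split(","):  # Services with several ports list them comma-separated
        match = _ENTRY.match(entry.strip())
        if match is None:
            raise ValueError(f"unrecognised PORT(S) entry: {entry!r}")
        port, node_port, protocol = match.groups()
        ports.append(ServicePort(int(port), int(node_port) if node_port else None, protocol))
    return ports


print(parse_ports_column("80:30424/TCP"))  # [ServicePort(port=80, node_port=30424, protocol='TCP')]
print(parse_ports_column("443/TCP"))       # [ServicePort(port=443, node_port=None, protocol='TCP')]
```

The `kubernetes` Service has no node port because it is of type ClusterIP, which is why its entry has no `:nodePort` part.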

We can view the YAML of this Service:

```shell
kubectl get service nginx -o yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  creationTimestamp: "2021-04-23T07:40:35Z"
  labels:
    app: nginx
  name: nginx
  namespace: default
  resourceVersion: "369738"
  uid: 8dc49805-2fc8-4407-adc0-8e31dc24fa79
spec:
  clusterIP: 10.101.132.236
  clusterIPs:
  - 10.101.132.236
  externalTrafficPolicy: Cluster
  ipFamilies:
  - IPv4
  ipFamilyPolicy: SingleStack
  ports:
  - nodePort: 30424
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: nginx
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer: {}
```

Using this YAML as a template, we can easily modify and customize a Service.
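The exported YAML also contains fields that the API server fills in: `uid`, `resourceVersion`, `creationTimestamp`, the allocated cluster IP and node port, and `status`. A minimal sketch, assuming the Service was exported as JSON with `kubectl get service nginx -o json`, of stripping those fields so the rest can serve as a template. `to_template` and the field lists are illustrative only, not an official tool, and cover just the fields visible in the YAML above.

```python
import copy
import json

# Fields filled in by the API server (assumed list, limited to the fields shown above).
SERVER_METADATA_FIELDS = ("creationTimestamp", "resourceVersion", "uid")
SERVER_SPEC_FIELDS = ("clusterIP", "clusterIPs")


def to_template(service: dict) -> dict:
    """Return a copy of an exported Service with server-assigned fields removed."""
    template = copy.deepcopy(service)  # never modify the caller's object
    template.pop("status", None)
    for key in SERVER_METADATA_FIELDS:
        template.get("metadata", {}).pop(key, None)
    for key in SERVER_SPEC_FIELDS:
        template.get("spec", {}).pop(key, None)
    for port in template.get("spec", {}).get("ports", []):
        port.pop("nodePort", None)  # let Kubernetes allocate a fresh node port
    return template


# Trimmed version of the Service shown above, in the JSON form kubectl can print.
exported = {
    "apiVersion": "v1",
    "kind": "Service",
    "metadata": {
        "name": "nginx", "namespace": "default", "labels": {"app": "nginx"},
        "creationTimestamp": "2021-04-23T07:40:35Z", "resourceVersion": "369738",
        "uid": "8dc49805-2fc8-4407-adc0-8e31dc24fa79",
    },
    "spec": {
        "clusterIP": "10.101.132.236", "clusterIPs": ["10.101.132.236"],
        "type": "LoadBalancer", "selector": {"app": "nginx"},
        "ports": [{"nodePort": 30424, "port": 80, "protocol": "TCP", "targetPort": 80}],
    },
    "status": {"loadBalancer": {}},
}

template = to_template(exported)
template["metadata"]["name"] = "nginx-copy"  # hypothetical new name for the customised copy
print(json.dumps(template, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, which accepts JSON as well as YAML. Dropping `nodePort` matters when cloning: two Services cannot claim the same node port.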

Let's look at the Pods created by the Deployment:

```shell
kubectl get pods -o wide
```

```
NAME                     IP               NODE         NOMINATED NODE   READINESS GATES
nginx-55649fd747-9fzlr   192.168.56.56    instance-2   <none>           <none>
nginx-55649fd747-ckhrw   192.168.56.57    instance-2   <none>           <none>
nginx-55649fd747-ldzkf   192.168.23.138   instance-1   <none>           <none>
```
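To see at a glance how the replicas are spread, the table can be grouped by its NODE column. The hypothetical helper below is a sketch that works on the trimmed text shown above; it assumes columns are separated by runs of two or more spaces (multi-word headers such as "NOMINATED NODE" use a single space), which holds for kubectl's aligned table output.

```python
import re
from collections import defaultdict
from typing import Dict, List

# The table above (columns trimmed as in the article).
WIDE_OUTPUT = """
NAME                     IP               NODE         NOMINATED NODE   READINESS GATES
nginx-55649fd747-9fzlr   192.168.56.56    instance-2   <none>           <none>
nginx-55649fd747-ckhrw   192.168.56.57    instance-2   <none>           <none>
nginx-55649fd747-ldzkf   192.168.23.138   instance-1   <none>           <none>
"""


def pods_by_node(table: str) -> Dict[str, List[str]]:
    """Group Pod names by the NODE column of a `kubectl get pods -o wide` table."""
    lines = [line for line in table.splitlines() if line.strip()]
    # Split on 2+ spaces so "NOMINATED NODE" stays one header.
    header = re.split(r"\s{2,}", lines[0].strip())
    groups: Dict[str, List[str]] = defaultdict(list)
    for row in lines[1:]:
        record = dict(zip(header, re.split(r"\s{2,}", row.strip())))
        groups[record["NODE"]].append(record["NAME"])
    return dict(groups)


for node, pods in pods_by_node(WIDE_OUTPUT).items():
    print(node, pods)
```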

Note: The Pods run on different nodes.

When we access the Service from the external network, it forwards the request to one of the Pods. The underlying process is fairly complex; here we only look at what can be observed from the outside.

```
                   ------------
                  |            |
--- Public IP --> |    pod1    |
                  |    pod2    |
                  |    pod3    |
                   ------------
```

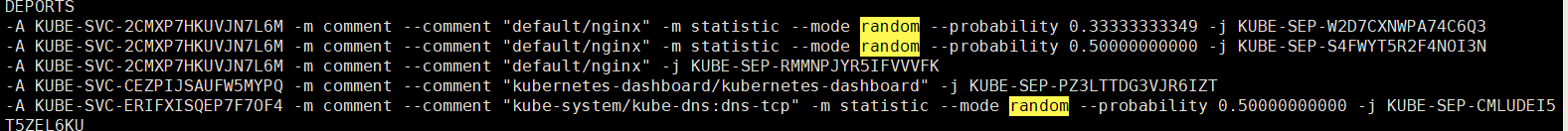

Next, check the iptables configuration with the command:

```shell
iptables-save
```

Then search the output for the keyword random:

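You will see three rules for default/nginx. The first rule selects the first Pod with probability 0.33333... (1/3). The traffic that falls through (probability 2/3) reaches the second rule, which selects the second Pod with probability 0.5, so the second Pod also receives 2/3 × 1/2 = 1/3 of the traffic. Everything that remains (the last 1/3) goes to the third Pod.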
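The cascade can be checked with exact fractions. The sketch below models n chained rules in which rule i (counting from 0) fires with probability 1/(n - i). For three Pods this reproduces the 1/3, 1/2, remainder pattern described above. It models the arithmetic only and does not read real iptables rules; the general 1/(n - i) formula is an assumption extrapolated from the three-Pod case.

```python
import random
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Probability attached to each of the n chained "random" rules.

    Rule i (0-based) fires with probability 1/(n - i); the last one is 1, i.e. it
    catches whatever traffic the earlier rules let through.
    """
    return [Fraction(1, n - i) for i in range(n)]


def overall_shares(n: int) -> List[Fraction]:
    """Fraction of all connections that ends up at each endpoint."""
    remaining = Fraction(1)  # traffic not yet claimed by an earlier rule
    shares = []
    for p in rule_probabilities(n):
        shares.append(remaining * p)
        remaining *= 1 - p
    return shares


def simulate(n: int, trials: int, rng: random.Random) -> List[int]:
    """Walk the rule chain once per simulated connection and count hits per endpoint."""
    counts = [0] * n
    probs = [float(p) for p in rule_probabilities(n)]
    for _ in range(trials):
        for i, p in enumerate(probs):
            if rng.random() < p:  # random() is in [0, 1), so p == 1.0 always matches
                counts[i] += 1
                break
    return counts


print([str(p) for p in rule_probabilities(3)])  # ['1/3', '1/2', '1']
print([str(s) for s in overall_shares(3)])      # ['1/3', '1/3', '1/3']
print(simulate(3, 30_000, random.Random(42)))   # each count close to 10,000
```

Chaining conditional probabilities this way lets every rule make an independent coin flip while still giving each endpoint an equal overall share.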

To reach the Pods, access the IP of any node that runs nginx Pods. In the author's setup, access through the master's IP did not work; by default the master carries a taint that keeps ordinary Pods from being scheduled on it, so no nginx Pod runs there.

Of course, the probability is not exactly 0.33333...: iptables stores only an approximation, and the full rule set is complicated enough that it is hard to explain completely here. All we need to know is that the external network can reach the Service, and the Service forwards the traffic to the Pods through iptables rules. Even when the Deployment's Pods are spread across different nodes, Kubernetes networking (kube-proxy together with the cluster's network plugin) routes the traffic correctly, and we do not need to configure these networks by hand.

[Image source: k8s official website]

Service Definition

In the previous section, we created a Service with kubectl expose ... and saw that its forwarding relies on iptables. Here we continue with how to define a Service.

Previously, we exposed a Deployment directly, so the Service uniformly covered all of that Deployment's Pods (replicas). We can also set up network mapping for other sets of Pods.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: my-service
spec:
  selector:
    app: MyApp
  ports:
    - protocol: TCP
      port: 6666
      targetPort: 80
```

This YAML uses a selector, which usually contains an app label. By convention the label's value names the application (often after its image), and the Service matches every Pod that carries the label. port is the port the Service exposes on its cluster IP, and targetPort is the port on the Pod to which traffic is forwarded.

Even when the Pods are not created through a Deployment or Job, we can still use the selector to pick out the right Pods by their labels.
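A Service's selector is a plain map of labels, and a Pod is selected when it carries all of them, regardless of which controller (if any) created it. The hypothetical helpers below sketch that equality-based matching rule; they model the semantics only and are not part of any Kubernetes client library. The Pod names and labels are made up for illustration.

```python
from typing import Dict, List

Labels = Dict[str, str]


def selector_matches(selector: Labels, pod_labels: Labels) -> bool:
    """Equality-based match: every key/value in the selector must appear on the Pod."""
    if not selector:
        # A Service without a selector selects no Pods automatically; its
        # endpoints have to be supplied by hand instead.
        return False
    return all(pod_labels.get(key) == value for key, value in selector.items())


def select_pods(selector: Labels, pods: List[dict]) -> List[str]:
    return [pod["name"] for pod in pods if selector_matches(selector, pod["labels"])]


# Hypothetical Pods; only the labels matter, not how the Pods were created.
pods = [
    {"name": "myapp-a", "labels": {"app": "MyApp", "tier": "web"}},
    {"name": "myapp-b", "labels": {"app": "MyApp"}},
    {"name": "nginx-a", "labels": {"app": "nginx"}},
]

print(select_pods({"app": "MyApp"}, pods))  # ['myapp-a', 'myapp-b']
print(select_pods({}, pods))                # []
```

Extra labels on a Pod (such as `tier: web`) do not prevent a match; only the labels named in the selector are checked.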

A Service maps the port it exposes (port) to a port on the receiving container or Pod (targetPort). When kubectl expose is used with the NodePort or LoadBalancer type, as in the first example, Kubernetes also assigns a node port, by default picked from the range 30000-32767 (30424 above). When writing YAML, targetPort defaults to the same value as port if it is omitted.
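The two defaults in the paragraph above can be sketched as follows, under stated assumptions: `apply_port_defaults` and `allocate_node_port` are hypothetical names, the defaults (TCP protocol, targetPort equal to port, node ports from 30000-32767) follow the documented behaviour, and the random pick is a simplification of the API server's real allocator, which also handles named target ports and explicitly requested node ports.

```python
import random
from typing import Set

# Default value of kube-apiserver's --service-node-port-range (30000-32767 inclusive).
NODE_PORT_RANGE = range(30000, 32768)


def apply_port_defaults(entry: dict) -> dict:
    """Fill in the defaults for one item of a Service's spec.ports list."""
    if "port" not in entry:
        raise ValueError("port is required")
    result = dict(entry)                             # leave the input untouched
    result.setdefault("protocol", "TCP")             # TCP is the default protocol
    result.setdefault("targetPort", result["port"])  # targetPort defaults to port
    return result


def allocate_node_port(in_use: Set[int], rng: random.Random) -> int:
    """Pick a free node port at random (simplified model of the real allocator)."""
    free = [p for p in NODE_PORT_RANGE if p not in in_use]
    if not free:
        raise RuntimeError("node port range exhausted")
    return rng.choice(free)


print(apply_port_defaults({"port": 6666, "targetPort": 80}))
# {'port': 6666, 'targetPort': 80, 'protocol': 'TCP'}
print(apply_port_defaults({"port": 80}))
# {'port': 80, 'protocol': 'TCP', 'targetPort': 80}
print(allocate_node_port({30424}, random.Random(1)))
```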

Endpoint Slices

"Endpoint Slices provide a simple way to track network endpoints in a Kubernetes cluster. They offer a scalable and extensible alternative to Endpoints."

In Kubernetes, an EndpointSlice contains references to a set of network endpoints. For any Service that specifies a selector, the control plane automatically creates EndpointSlices, along with an Endpoints object of the same name.

In other words, creating a Service that has a selector automatically creates its Endpoints.

Let's check the endpoints in the default namespace:

```shell
kubectl get endpoints
```

```
NAME         ENDPOINTS                                            AGE
kubernetes   10.170.0.2:6443                                      3d7h
nginx        192.168.56.24:80,192.168.56.25:80,192.168.56.26:80   59m
```

These are the IPs and ports of the Pods: through Endpoints, tracking network endpoints inside the Kubernetes cluster becomes much more flexible. Such a description can still be hard to grasp, though; the author had to consult many resources and experiment repeatedly to understand these concepts. Next, we will work through the operations step by step and gradually understand what each one means.
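Conceptually, the ENDPOINTS column is the list of `ip:targetPort` pairs for the ready Pods that match the Service's selector. The sketch below models that behaviour; the function name is hypothetical, the first three Pod IPs come from the output above, and the labels, readiness flags and the fourth Pod are made up to show a Pod being left out. The real controller is more involved (it records not-ready Pods under `notReadyAddresses`, handles named and multiple ports, and writes EndpointSlices as well).

```python
from typing import Dict, List

# The first three IPs are from the output above; everything else is hypothetical.
pods = [
    {"ip": "192.168.56.24", "labels": {"app": "nginx"}, "ready": True},
    {"ip": "192.168.56.25", "labels": {"app": "nginx"}, "ready": True},
    {"ip": "192.168.56.26", "labels": {"app": "nginx"}, "ready": True},
    {"ip": "192.168.56.27", "labels": {"app": "nginx"}, "ready": False},  # still starting
]


def endpoint_addresses(selector: Dict[str, str], target_port: int, pods: List[dict]) -> List[str]:
    """Return "ip:port" for every ready Pod whose labels match the selector (simplified)."""
    if not selector:
        return []  # Services without a selector get no automatically managed endpoints
    return [
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod["ready"] and all(pod["labels"].get(k) == v for k, v in selector.items())
    ]


print(",".join(endpoint_addresses({"app": "nginx"}, 80, pods)))
# 192.168.56.24:80,192.168.56.25:80,192.168.56.26:80
```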

Creating Endpoint and Service

Next, we will create the Service and the Endpoint by hand. We create the Service first and then the Endpoint, although the order does not matter.

Service

First, delete the Service created earlier:

```shell
kubectl delete service nginx
```

Write the following into a service.yaml file:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  ports:
    - protocol: TCP
      port: 6666
      targetPort: 80
```

Apply this Service:

```shell
kubectl apply -f service.yaml
```

Check the Service:

```shell
kubectl get services
```

```
NAME         TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)    AGE
kubernetes   ClusterIP   10.96.0.1       <none>        443/TCP    3d12h
nginx        ClusterIP   10.98.113.242   <none>        6666/TCP   6s
```

Because this Service has no selector, it does not map to any Pods and is not usable yet. It does, however, already pin down the address and port through which nginx will be reached; what actually serves nginx can be decided later. The Service and the Endpoint can be created in either order; we create the Service first to illustrate the idea of agreeing on the address and port before providing the service behind them.

Creating Application

Pick any node, worker or master, and start an nginx container on it:

```shell
docker run -itd -p 80:80 nginx:latest
```

Why start a container directly instead of a Pod? Because we are in the development stage. If nginx were replaced by MySQL, how would we debug it, test against our database, or simulate data? In production we would create the application through a Deployment, but for now we can use our own database or local applications.

The official documentation gives these cases for a Service without a selector:

- We want to use an external database cluster in production, but our own database in the testing environment.

- We want the Service to point to a Service in another namespace or another cluster.

- You are migrating a workload to Kubernetes and, while evaluating the approach, run only part of the backend in Kubernetes.

In short, the Service we created provides the abstraction. How the service is actually delivered is up to us: a Pod, a binary run directly on a machine, or a Docker container. MySQL, for instance, might be provided as an external service, or installed directly on a host without containers or Pods. Either way, the Endpoint lets us track the address and port of the MySQL service.

Next, look up the IP of this container:

```shell
docker inspect {container_id} | grep IPAddress
```

The author got "IPAddress": "172.17.0.2". Run curl 172.17.0.2 to check that nginx is reachable; if it is, move on to the next step.
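`grep IPAddress` prints every matching line, including one per attached network. Since `docker inspect` emits a JSON array, the lookup can also be done by parsing it. The sketch below reads `NetworkSettings.Networks`; the sample is heavily trimmed and its `Id` is a placeholder. Docker's own `--format` option achieves the same without Python, e.g. `docker inspect --format='{{range .NetworkSettings.Networks}}{{.IPAddress}}{{end}}' {container_id}`.

```python
import json
from typing import Dict

# Heavily trimmed sample of `docker inspect <container>` output; the Id is a placeholder.
SAMPLE = """
[
  {
    "Id": "0123456789ab",
    "NetworkSettings": {
      "IPAddress": "172.17.0.2",
      "Networks": {
        "bridge": {"IPAddress": "172.17.0.2"}
      }
    }
  }
]
"""


def container_ips(inspect_output: str) -> Dict[str, str]:
    """Map each network the container is attached to onto its IP address."""
    container = json.loads(inspect_output)[0]  # docker inspect prints a JSON array
    networks = container["NetworkSettings"]["Networks"]
    return {name: net["IPAddress"] for name, net in networks.items() if net.get("IPAddress")}


print(container_ips(SAMPLE))  # {'bridge': '172.17.0.2'}
```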

Creating Endpoint

Create an endpoint.yaml file with the following content (replace the IP with your own container's IP):

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: nginx
subsets:
  - addresses:
      - ip: 172.17.0.2
    ports:
      - port: 80
```

Then apply the YAML:

```shell
kubectl apply -f endpoint.yaml
```

Check the endpoint:

```shell
kubectl get endpoints
```

# cannot be filled as endpoint

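Then access the Service through its cluster IP (use the CLUSTER-IP that kubectl get services reports in your own cluster):

```shell
curl 10.99.142.24:6666
```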

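Because this is a ClusterIP Service, its address is reachable only from inside the cluster; reaching it from the public network would require a NodePort or LoadBalancer Service like the one created at the beginning.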

If the Endpoint needs to track multiple IPs (multiple Pods, containers, or applications), list them all:

```yaml
- addresses:
    - ip: 172.17.0.2
    - ip: 172.17.0.3
    - ip: 172.17.0.4
    ... ...
```
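When the address list grows, generating the manifest can be less error-prone than editing YAML by hand. A minimal sketch, assuming a single port shared by all addresses: `endpoints_manifest` is a hypothetical helper. Its output is JSON, which `kubectl apply -f` accepts as well as YAML. Two rules it encodes come from the Kubernetes documentation: the Endpoints `metadata.name` must equal the Service name, and loopback and link-local addresses are not allowed as endpoint IPs (the check here is partial).

```python
import ipaddress
import json
from typing import List


def endpoints_manifest(service_name: str, ips: List[str], port: int) -> dict:
    """Build a v1 Endpoints object for a Service that has no selector.

    metadata.name must equal the Service's name: that is what links the two.
    """
    for ip in ips:
        addr = ipaddress.ip_address(ip)  # raises ValueError on malformed input
        # Partial check: Kubernetes rejects loopback and link-local endpoint addresses.
        if addr.is_loopback or addr.is_link_local:
            raise ValueError(f"{ip} cannot be used as an endpoint address")
    return {
        "apiVersion": "v1",
        "kind": "Endpoints",
        "metadata": {"name": service_name},
        "subsets": [
            {
                "addresses": [{"ip": ip} for ip in ips],
                "ports": [{"port": port}],
            }
        ],
    }


manifest = endpoints_manifest("nginx", ["172.17.0.2", "172.17.0.3", "172.17.0.4"], 80)
print(json.dumps(manifest, indent=2))  # save as endpoint.json, then: kubectl apply -f endpoint.json
```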
