This tutorial is part of the Istio series: https://istio.whuanle.cn

1. Overview of Istio

🚩 Let's Talk About Microservices Design

Many people seem to assume that using Kubernetes means you have a microservice system.

I have met many people and companies who blindly worship Kubernetes and constantly push to adopt it, yet have neither the technical expertise nor a migration plan. They assume that adopting Kubernetes will turn their product into a distributed, high-performance, high-end microservice system.

In practice, adopting Kubernetes does little to fix the shortcomings of many companies' old systems. With large amounts of tangled code, careless use of database transactions, functions hundreds of lines long, and heavy dependence on other projects' interfaces, the system stays slow no matter how many application instances you run on Kubernetes.

This situation is very common; your company's project and mine are probably examples.

In my experience, many small and medium-sized companies start transforming from monolithic systems to those using Kubernetes infrastructure; however, most of their usage of Kubernetes is superficial. Here are some common scenarios.

🥇 Only Using Kubernetes to Deploy Containers

Only using Kubernetes to deploy containers, with almost no other changes.

Such systems use Jenkins for CI/CD to build container images, which are then deployed to Kubernetes. However, they give no thought to fault tolerance or to communication between internal and external systems, use no observability stack (monitoring, logging, tracing), and have no service discovery or load balancing. The system is merely split into multiple services for deployment, and services reach each other through hard-coded fixed addresses, which makes configuration changes painful.

The only highlights of such systems are the use of Kubernetes, possibly alongside data storage systems like Redis or MongoDB.

However, due to the lack of reasonable architectural design, although the system has been split, the benefits it brings are merely that the development teams can take on a project independently, making management of development work easier.

To let sub-services communicate, teams can only keep adding APIs to the user center or other services so that sub-services can fetch the information they need. This kind of split has many drawbacks. Splitting services isolates their data: each service has its own database (usually a different database name on the same database engine), and issues that arise in a distributed setup, such as data consistency and distributed transactions, are often neglected.

Due to a lack of sufficient infrastructure support, troubleshooting service communication issues can become extremely difficult, requiring project teams to coordinate with different groups, potentially modifying code multiple times for a single issue, continuously redeploying and appending logs to locate and fix bugs.

🥈 Starting to Use Some Middleware to Improve Infrastructure

To solve some issues in the microservice system, development teams introduce some middleware.

Data Synchronization: To address data isolation, database synchronization tools such as Canal are introduced. For example, synchronizing the user table from the user center to downstream services lets those services join against it directly in their own databases, which makes data aggregation easier. To make aggregated data easier to query, MySQL changes are also consumed and synchronized to Elasticsearch (ES), Kafka, and other systems for secondary processing.

Automated Deployment and Continuous Integration: The deployment of microservices is automated to reduce manual intervention and errors.

Monitoring and Logging: Distributed monitoring and logging are used within the cluster for easier tracking and diagnosing of problems. 【Istio can help you】

Service Discovery and Load Balancing: Middleware such as Consul is used for service registration and discovery, so that service addresses no longer have to be maintained by hand in each service's configuration. It also offers health checks, load balancing, and other features. 【Istio can help you】

Configuration Management: Configuration centers like Nacos and Apollo are used to dynamically change service configurations.

Unreasonable Service Partitioning: In microservice architecture, it is crucial to split the system into multiple independent services, but unreasonable partitioning can lead to excessive splitting of services or functional coupling.

Data Consistency: Independent data storage across microservices could lead to data consistency issues.

High Coupling: Excessive coupling between services may result in changes to one service affecting others, reducing the maintainability of the system.

Difficulties in Diagnosis and Monitoring: Due to the distributed nature of microservices, diagnosing and monitoring issues can be difficult. 【Istio can help you】

Security Issues: Communication between microservices could lead to security concerns, with most systems not considering external and internal communication authentication issues. 【Istio can help you】

Such systems may solve some problems occurring in service communication and failures while providing middleware to alleviate the burdens on developers.

However, many problems may still remain. I'll discuss some real-life examples I have encountered.

Firstly, code is coupled with too many components. For example, to support tracing and log collection, many related libraries are referenced in the code, requiring complex configurations.

These components can actually be relegated to the infrastructure, which is precisely why I am writing this Istio tutorial.

The ABP framework in .NET may be one of the criticized subjects, as ABP is really too "heavy." Consider how many components ABP has; each module requires adding code and configurations to use it. Just the configuration of these modules and extending services can lead to a significant amount of code, resulting in high learning costs.

Think about it: with so many modules and configurations in a project, how much can anyone remember? Every time a new project is created, a pile of configuration and code has to be copied from other projects, which is exhausting.

Additionally, microservices communicate over the network, and the lack of a good fault handling solution is also common. Microservice communication can experience network latency and faults, requiring facilities to implement timeout, retry, and circuit breaker patterns to mitigate the impact of network issues.

In practice, most development teams do not handle these issues properly. A sub-service sends an HTTP request directly, and if the request fails it simply throws an exception. At best, the team adds a component to the code that handles faults automatically, for example with retries or circuit breaking.

For example, in C#, one can use the Polly component to configure timeout, retries, etc., for HTTP requests. However, these configurations are hardcoded in the code; if changes are needed, code modifications are required, and developers may not always preset optimal parameters. Adding another component increases the "weight" of the program and exacerbates maintenance difficulties.
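To make the problem concrete, here is a minimal Python sketch of retry logic living inside application code. It is not Polly and not taken from any real project: `call_with_retry`, `FlakyUserCenter`, and the constants `MAX_ATTEMPTS` and `BACKOFF_SECONDS` are hypothetical names chosen for illustration.

```python
import time

# Hypothetical values baked into the service: changing them means
# editing code, rebuilding, and redeploying.
MAX_ATTEMPTS = 3
BACKOFF_SECONDS = 0.01


def call_with_retry(operation, max_attempts=MAX_ATTEMPTS, backoff=BACKOFF_SECONDS):
    """Call operation(); on ConnectionError, wait and retry up to max_attempts times."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except ConnectionError as error:
            last_error = error
            if attempt < max_attempts:
                time.sleep(backoff * attempt)  # linear backoff between attempts
    raise last_error


class FlakyUserCenter:
    """Stand-in for a remote call that fails a fixed number of times, then succeeds."""

    def __init__(self, failures_before_success):
        self.remaining_failures = failures_before_success
        self.calls = 0

    def get_user(self):
        self.calls += 1
        if self.remaining_failures > 0:
            self.remaining_failures -= 1
            raise ConnectionError("user center unreachable")
        return {"id": 1, "name": "demo"}


if __name__ == "__main__":
    service = FlakyUserCenter(failures_before_success=2)
    print(call_with_retry(service.get_user), "after", service.calls, "calls")
```

Every service that talks over the network needs something like this, and each copy carries its own tuning parameters. That duplication is the cost the paragraph above describes.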

Thus, designing a microservice system is not easy.

❓ Why Should I Learn Istio

Firstly, Istio can move many configurations to the infrastructure layer, significantly reducing the components, code, and configurations in our project.

As the saying goes, architectural design determines the upper limit of a system, while implementation details determine its lower limit.

Think about it: if you use ABP to develop business logic, how much of the configuration do you remember when you open the project code? Every time you create a new project, you have to copy a pile of code and configuration from an old one, and then add even more configuration for the various middleware. I have seen several projects built with ABP, and the amount of configuration is overwhelming. ABP also pulls in many dependencies, so it is easy to hit conflicts caused by inconsistent component versions when different project modules are updated.

As mentioned earlier, microservice communications require automatic fault recovery, automatic retries, and circuit breakers, as well as support for health checks and load balancing. If these are all configured in the code, every project has to repeat this labor.
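As a rough illustration of what moving this into infrastructure can look like, the sketch below builds an Istio `VirtualService` manifest with a retry and timeout policy as a Python dictionary and prints it as JSON (which `kubectl` accepts as well as YAML). The service name `user-center` and the parameter values are assumptions made for the example, and the `apiVersion` shown may differ depending on your Istio version.

```python
import json


def retry_virtual_service(host, attempts, per_try_timeout, overall_timeout):
    """Build a VirtualService manifest that retries failed HTTP calls to `host`."""
    return {
        "apiVersion": "networking.istio.io/v1beta1",  # assumption: adjust to your Istio version
        "kind": "VirtualService",
        "metadata": {"name": f"{host}-retry"},
        "spec": {
            "hosts": [host],
            "http": [
                {
                    "route": [{"destination": {"host": host}}],
                    "timeout": overall_timeout,  # budget for the whole request
                    "retries": {
                        "attempts": attempts,
                        "perTryTimeout": per_try_timeout,
                        "retryOn": "5xx,connect-failure",
                    },
                }
            ],
        },
    }


# Hypothetical service name and values, for illustration only.
manifest = retry_virtual_service("user-center", 3, "2s", "10s")
print(json.dumps(manifest, indent=2))
```

The point of the sketch is that the retry parameters become cluster configuration: they can be changed for one service without touching or redeploying its code.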

Therefore, my primary reasons for learning Istio are threefold:

- To put things from the code into infrastructure, reducing the amount of code and complex configurations.

- To learn many microservice design concepts.

- To design system architecture more reasonably on Kubernetes.

Of course, depending on the size of the company and the demands of the business, Istio may not be needed at all. Istio is also quite heavy, and it is no easier to learn than Kubernetes.

Before writing this article, I chatted with an expert who said that most companies do not need Istio, while those that do will choose to develop their own. In fact, whether or not to use it isn’t the most important thing; the crucial aspect is that you can gain insights into many design philosophies while learning Istio, as well as understanding the problems that these Istio components solve. Then, in addition to using Istio, we can also use other methods to tackle these issues.

💡 So, What is Istio

Istio is a service mesh closely integrated with Kubernetes, used for service governance.

Note that Istio is used for service governance, mainly focusing on traffic management, inter-service security, and observability. In microservice systems, many challenging issues arise, and Istio can only address a small portion of these.

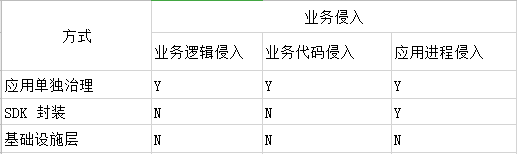

Service governance can be approached in three ways: the first method involves having separate governance logic included in each project, which is comparatively straightforward; the second method encapsulates the logic into an SDK that each project references, requiring little to no additional configurations or code; and the third method is to integrate governance into the infrastructure layer. Istio represents the third method.

Istio is non-intrusive to business code: using it requires no changes to the project's source code.

Three Main Functions of Istio

Next, let's introduce the three major capabilities of Istio.

⏩ Traffic Management

Traffic management includes the following functionalities:

- Dynamic service discovery

- Load balancing

- TLS termination

- HTTP/2 and gRPC proxying

- Circuit breakers

- Health checks

- Staged rollouts based on percentage traffic split

- Fault injection

- Rich metrics
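Of the items above, the percentage-based traffic split is the easiest to picture in code. The following is a toy simulation, not Istio's or Envoy's implementation: `pick_destination` and `simulate` are hypothetical helpers that choose a service version for each request according to configured weights.

```python
import random
from collections import Counter


def pick_destination(weighted_routes, rng):
    """Pick one destination name; weights are percentages and must sum to 100."""
    names = list(weighted_routes)
    weights = [weighted_routes[name] for name in names]
    if sum(weights) != 100:
        raise ValueError("route weights must sum to 100")
    return rng.choices(names, weights=weights, k=1)[0]


def simulate(weighted_routes, requests, seed=0):
    """Route `requests` simulated requests and count how many each version receives."""
    rng = random.Random(seed)  # seeded so repeated runs give the same counts
    return Counter(pick_destination(weighted_routes, rng) for _ in range(requests))


if __name__ == "__main__":
    # Send roughly 10% of traffic to the new version, the rest to the old one.
    print(simulate({"v1": 90, "v2": 10}, requests=10_000))
```

A staged rollout then amounts to raising the `v2` weight step by step while watching error rates, and setting it back to 0 if something goes wrong.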

⏩ Observability

Istio integrates with distributed tracing backends such as Jaeger, Zipkin, and SkyWalking, and with Prometheus for collecting metrics. Its logging is fairly basic: access logs that record request addresses and similar details. Istio's observability helps us understand application performance and behavior, which makes fault detection, performance analysis, and capacity planning much simpler.

⏩ Security Capabilities

The main feature is to enable encrypted communication between services in a zero-trust network. Istio enhances security by automatically providing mutual TLS encryption for service communications. Additionally, Istio offers robust identity, authorization, and auditing capabilities.

Principle of Istio

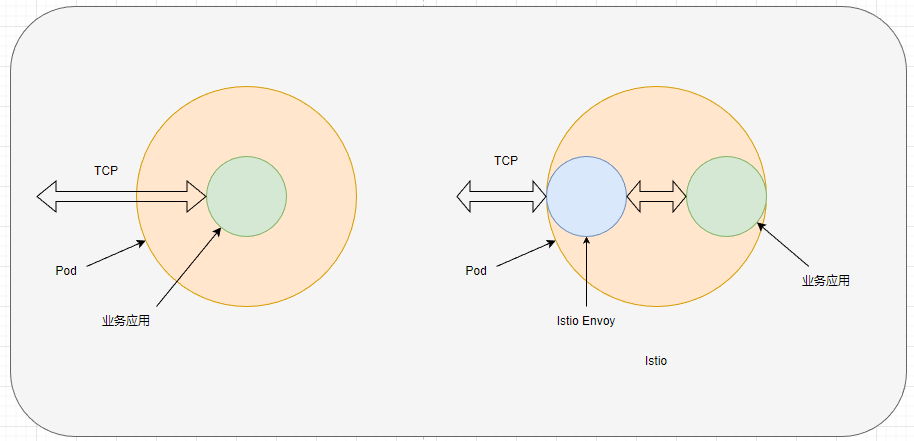

Istio works by watching Pods as they are created in Kubernetes and injecting an Envoy proxy container into them. This container intercepts the traffic destined for the business application. Because all traffic is "hijacked" by Envoy, Istio can analyze it (for example, by collecting request information), apply traffic management rules, and validate authorization information. Once Envoy has processed a request and the request passes these checks, Envoy forwards it to the business application container in the same Pod.

Of course, because Envoy intercepts traffic and forwards it to the business application, it adds an extra hop, which slightly increases response times. For most workloads the added latency is small.

Each Pod has an Envoy responsible for intercepting, processing, and forwarding all network traffic in and out of the Pod, a method known as Sidecar.
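The sidecar idea can be sketched in a few lines of Python. This is only an in-process toy model under simplifying assumptions, not how Envoy works internally: `ToySidecar`, `Request`, and `business_app` are hypothetical names, and real sidecars operate on network traffic rather than function calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Set, Tuple


@dataclass
class Request:
    source: str  # identity of the calling service
    path: str


@dataclass
class ToySidecar:
    """Stand-in for a proxy that sits in front of exactly one application handler."""

    app: Callable[[Request], Tuple[int, str]]
    allowed_sources: Set[str]
    stats: Dict[str, int] = field(default_factory=lambda: {"forwarded": 0, "denied": 0})

    def handle(self, request: Request) -> Tuple[int, str]:
        # 1. Policy check happens before the application ever sees the request.
        if request.source not in self.allowed_sources:
            self.stats["denied"] += 1
            return 403, "denied by proxy"
        # 2. Telemetry is recorded without any code in the application.
        self.stats["forwarded"] += 1
        # 3. Only then is the request forwarded to the business logic.
        return self.app(request)


def business_app(request: Request) -> Tuple[int, str]:
    """The application contains business logic only."""
    return 200, f"hello from {request.path}"


if __name__ == "__main__":
    sidecar = ToySidecar(app=business_app, allowed_sources={"orders"})
    print(sidecar.handle(Request("orders", "/users/1")))
    print(sidecar.handle(Request("unknown", "/users/1")))
    print(sidecar.stats)
```

Note that `business_app` knows nothing about access control or metrics. That separation is what lets the proxy be added or reconfigured without changing the application.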

Here are some of the main functions of the Istio Sidecar:

-

Traffic Management: The Envoy proxy can implement traffic forwarding, splitting, and mirroring based on routing rules (such as VirtualService and DestinationRule) configured in Istio.

-

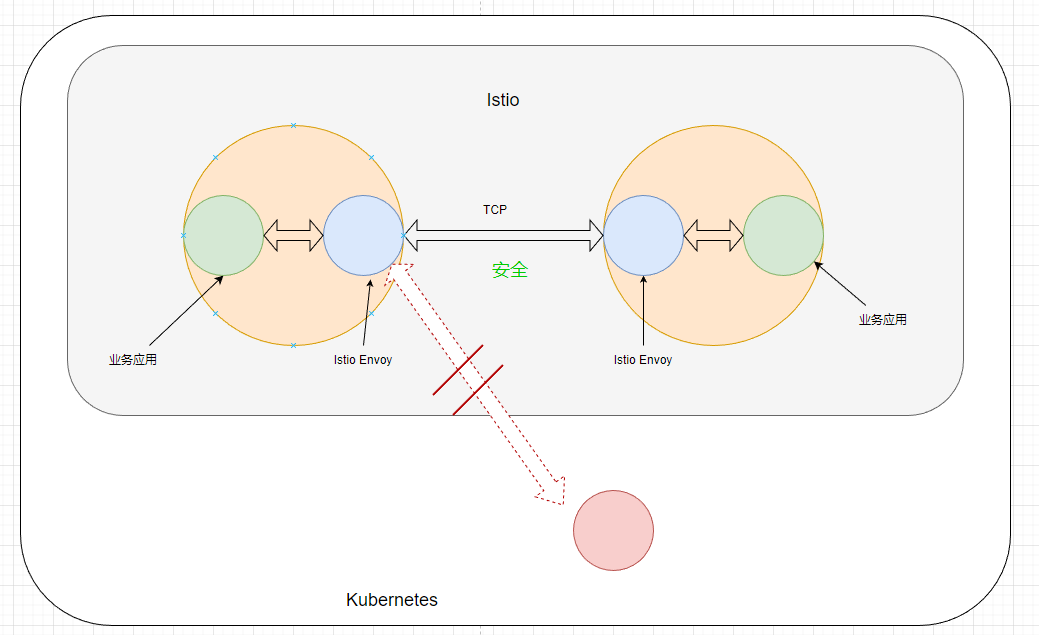

Secure Communication: The Envoy proxy is responsible for establishing secure mutual TLS connections between services, ensuring the security of inter-service communication.

-

Telemetry Data Collection: The Envoy proxy can collect detailed telemetry data regarding network traffic (such as latency, success rates, etc.) and report this data to Istio's telemetry components for monitoring and analysis.

-

Policy Enforcement: The Envoy proxy can execute rate limiting, access control, and other policies according to the rules configured in Istio (like RateLimit and AuthorizationPolicy).

Because Pods expose their ports through Envoy, all ingress and egress traffic passes through Envoy. This makes it easy to determine the source of a request. When mutual TLS is enforced in strict mode and the requester is not a service in the mesh, Envoy refuses access.
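Whether callers from outside the mesh are actually rejected depends on the mutual TLS mode: Istio's `PeerAuthentication` resource has an `mtls.mode` field, and plaintext traffic is refused when it is `STRICT`, while the default `PERMISSIVE` mode accepts both. As a hedged sketch, the Python snippet below builds such a manifest as a dictionary and prints it as JSON. The namespace `demo` is a made-up example, and the `apiVersion` may differ depending on your Istio version.

```python
import json


def strict_mtls_policy(namespace):
    """Build a PeerAuthentication manifest requiring mutual TLS in one namespace."""
    return {
        "apiVersion": "security.istio.io/v1beta1",  # assumption: adjust to your Istio version
        "kind": "PeerAuthentication",
        "metadata": {"name": "default", "namespace": namespace},
        "spec": {"mtls": {"mode": "STRICT"}},
    }


# "demo" is a hypothetical namespace used only for illustration.
print(json.dumps(strict_mtls_policy("demo"), indent=2))
```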

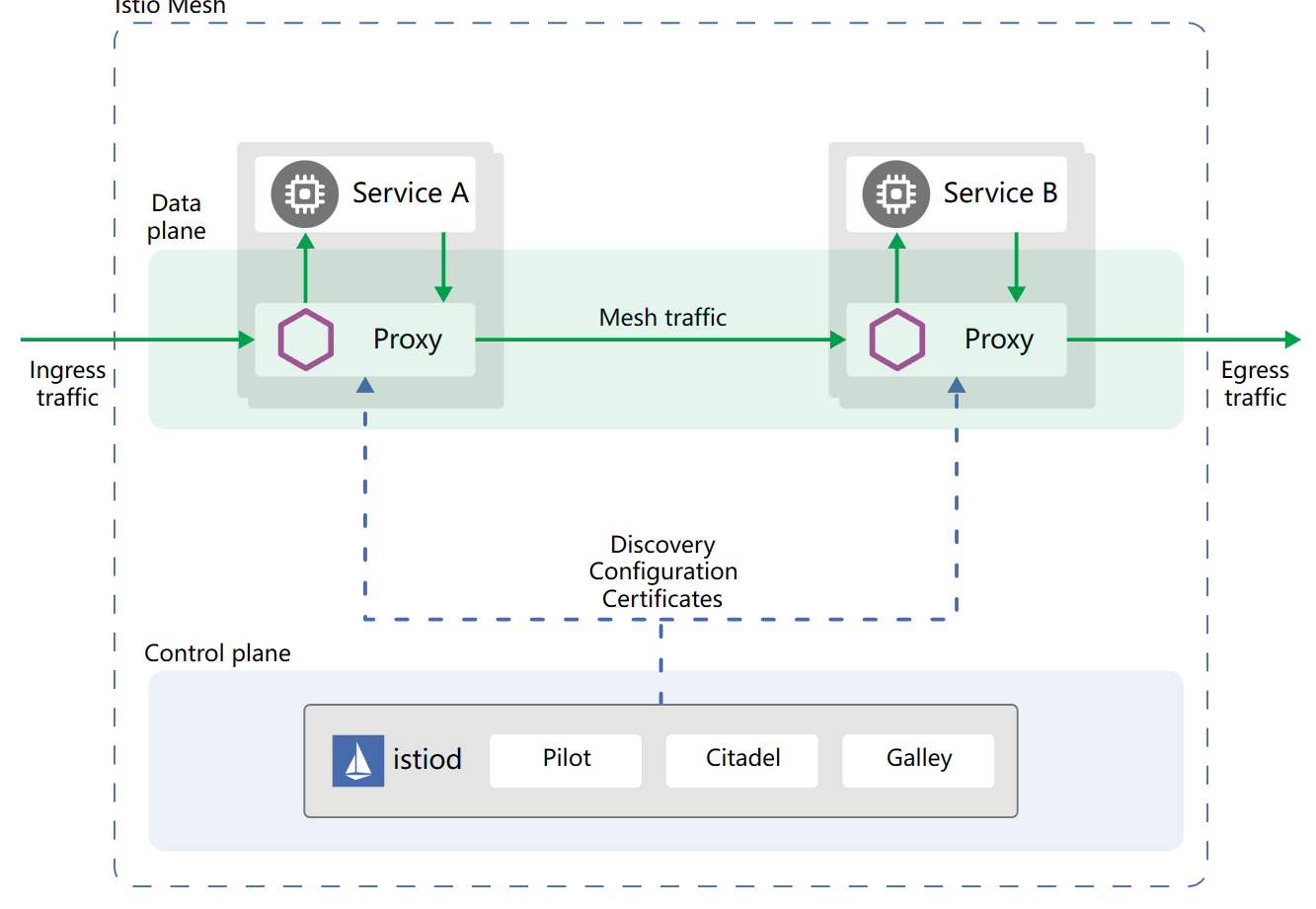

In Istio, the Envoy proxies are referred to as the data plane, while istiod, the component responsible for managing the mesh and configuring the proxies, acts as the control plane.

That's all for the introduction to Istio. In the upcoming chapters, we will delve deeper into Istio's functionality and principles through practical usage.
