Adding Parameters in Conversation

Use CreateFunctionFromPrompt() to create a KernelFunction instance from a prompt template.

A KernelFunction represents a function that can be called as part of a Semantic Kernel workload. In Semantic Kernel, a call to the LLM is itself wrapped as a KernelFunction. KernelFunctions fall into two types: semantic functions, which are defined by a prompt, and native functions, which are defined by ordinary code.

    // Builder kept as a field of the enclosing class and reused
    private readonly IKernelBuilder _kernelBuilder = Kernel.CreateBuilder();

    // Register the Azure OpenAI chat completion service (placeholder values)
    _kernelBuilder.AddAzureOpenAIChatCompletion(
        deploymentName: "aaa",
        endpoint: "https://1.openai.azure.com",
        apiKey: "1111111");
    var kernel = _kernelBuilder.Build();

    // {{$input}} is a template variable that is filled in at invocation time
    string translationPrompt = "Translate this sentence into English\r\n{{$input}}";
    var llm = kernel.CreateFunctionFromPrompt(translationPrompt);

    // Supply the value for {{$input}}
    KernelArguments args = new KernelArguments();
    args.Add("input", input);

    var response = await llm.InvokeAsync(kernel, args);
    return response.GetValue<string>();

KernelArguments supplies the value that replaces the {{$input}} variable in the template. For the input “我爱学习”, the result is:

The English translation is "I love studying."
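Conceptually, filling in {{$input}} is a string substitution that happens before the prompt is sent to the model. The following is a minimal sketch of that idea only, not Semantic Kernel's actual template engine: `RenderTemplate` is a hypothetical helper introduced here for illustration, and rendering a missing argument as an empty string is an assumption of this sketch.

```csharp
using System;
using System.Collections.Generic;
using System.Text.RegularExpressions;

// Hypothetical helper (not part of Semantic Kernel): replaces every
// {{$name}} placeholder with the matching value from the dictionary.
// Assumption of this sketch: unknown names render as an empty string.
static string RenderTemplate(string template, IReadOnlyDictionary<string, string> arguments) =>
    Regex.Replace(
        template,
        @"\{\{\s*\$(\w+)\s*\}\}",
        match => arguments.TryGetValue(match.Groups[1].Value, out var value)
            ? value
            : string.Empty);

// Same template and input as in the translation example above.
var prompt = RenderTemplate(
    "Translate this sentence into English\r\n{{$input}}",
    new Dictionary<string, string> { ["input"] = "我爱学习" });

// The rendered text is what would be sent to the model as the prompt.
Console.WriteLine(prompt);
```

The rendered prompt keeps the instruction line unchanged and places the argument value where the placeholder stood, which is why the same function can be reused with different inputs.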

Calling Plugin Functions

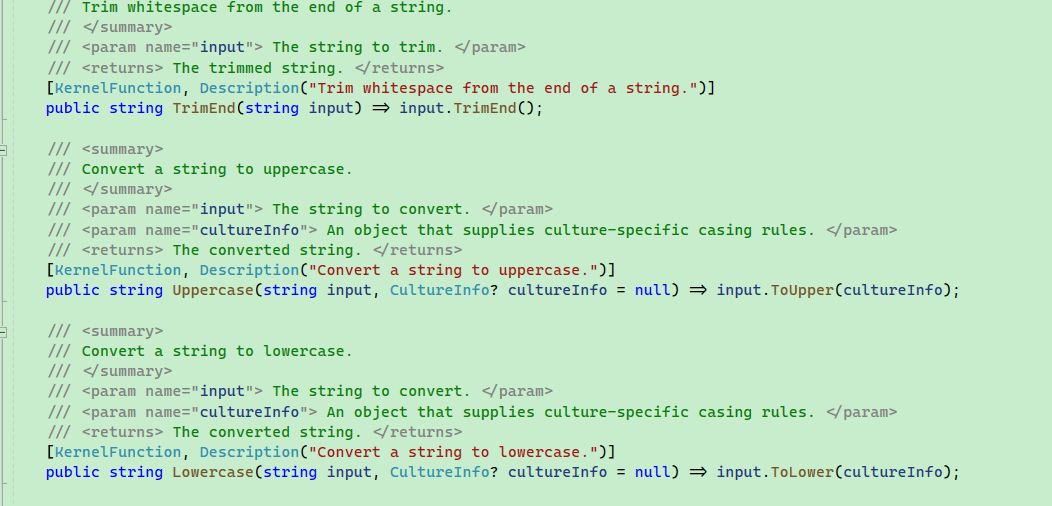

TextPlugin is a plugin class that contains several text-handling functions. The controller action below imports it and calls its Uppercase function:

    [HttpGet("/get")]
    public async Task<string> Get(string input)
    {
        var kernel = _kernelBuilder.Build();

        // Import TextPlugin; its methods become callable kernel functions
        var textPlugin = kernel.ImportPluginFromType<TextPlugin>();

        // Function parameters
        var arguments = new KernelArguments()
        {
            ["input"] = input
        };

        // textPlugin["Uppercase"] selects the function to call by name
        string? resultValue = await kernel.InvokeAsync<string>(textPlugin["Uppercase"], arguments);
        Console.WriteLine($"string -> {resultValue}");
        return "";
    }
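Plugin functions such as Uppercase are native functions: plain C# methods exposed to the kernel. To make that concrete, here is a minimal sketch of a custom plugin written in the same style. `SimpleTextPlugin` and its methods are hypothetical names invented for this illustration and are not the source of the real TextPlugin. The sketch assumes the Microsoft.SemanticKernel package is referenced; no AI service is registered, because native functions run locally.

```csharp
using System;
using System.ComponentModel;
using Microsoft.SemanticKernel;

// A kernel with no AI service is enough to run native functions.
var kernel = Kernel.CreateBuilder().Build();

// Import the hypothetical plugin defined at the bottom of this file.
KernelPlugin plugin = kernel.ImportPluginFromType<SimpleTextPlugin>();

// Call a function by name, passing its parameter through KernelArguments.
string? upper = await kernel.InvokeAsync<string>(
    plugin["Uppercase"],
    new KernelArguments { ["input"] = "hello" });

Console.WriteLine($"string -> {upper}");

// Hypothetical plugin class: each method marked with [KernelFunction]
// is exposed to the kernel under the method's name.
public sealed class SimpleTextPlugin
{
    [KernelFunction, Description("Converts a string to uppercase.")]
    public string Uppercase(string input) => input.ToUpperInvariant();

    [KernelFunction, Description("Removes leading and trailing whitespace.")]
    public string Trim(string input) => input.Trim();
}
```

The argument key ("input") has to match the method's parameter name, which is how the kernel binds values from KernelArguments to the method call.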

Conversation Configuration

For code reference visit http://studiogpt.cn/web/#/620853576/285421664

Set up the conversation context in the prompt, and configure execution settings such as the maximum number of tokens and the temperature:

    var kernel = _kernelBuilder.Build();

    string promptTemplate = @"
    Create a creative reason or excuse for the given event.
    Be creative and humorous. Let your imagination run wild.

    Event: I am going to be late.
    Excuse: I was held up for ransom by giraffe bandits.

    Event: I haven't been to the gym in a year.
    Excuse: I have been busy training my pet dragon.

    Event: {{$input}}
    ";

    var excuseFunction = kernel.CreateFunctionFromPrompt(
        promptTemplate,
        new OpenAIPromptExecutionSettings() { MaxTokens = 100, Temperature = 0.4, TopP = 1 });

Then invoke the function as usual, passing the parameter values as arguments:

    // Each call fills {{$input}} with a different event
    var result = await kernel.InvokeAsync(excuseFunction, new() { ["input"] = "I missed the F1 finals" });
    Console.WriteLine(result.GetValue<string>());

    result = await kernel.InvokeAsync(excuseFunction, new() { ["input"] = "Sorry, I forgot your birthday" });
    Console.WriteLine(result.GetValue<string>());

    // No template variable here: the date is inserted by C# string interpolation when the function is created
    var fixedFunction = kernel.CreateFunctionFromPrompt(
        $"Convert this date {DateTimeOffset.Now:f} to French format",
        new OpenAIPromptExecutionSettings() { MaxTokens = 100 });
    result = await kernel.InvokeAsync(fixedFunction);
    Console.WriteLine(result.GetValue<string>());
