Tutorial: Getting Started with Deep Learning Using C#

Author: whuanle

URL:

1.2 Basics of PyTorch

This section introduces PyTorch's basic API, focusing mainly on how to create and manipulate arrays. Because the content is very similar to NumPy, and NumPy arrays can be converted to torch.Tensor, readers interested in NumPy can refer to the author's other article:

- Introduction to the NumPy Framework in Python

https://www.whuanle.cn/archives/21461

https://www.cnblogs.com/whuanle/p/17855578.html

Tip: A solid understanding of linear algebra will help you get more out of this article, but it is not required; linear algebra is covered later. This article gives examples in both Python and C# so that readers can compare the two, and later chapters mainly use C#.

Basic Usage

Many values in neural networks take the form of vectors or arrays, which are less straightforward than the numeric types of everyday programming, so printing them is an important tool for learning and debugging. Let's look at how PyTorch prints these complex data types.

Printing

The following code creates an array of the numbers 0 to 9 with PyTorch and prints it.

Python:

```python
import torch

x = torch.arange(10)
print(x)
```

```
tensor([0, 1, 2, 3, 4, 5, 6, 7, 8, 9])
```

In C#, instead of Console.WriteLine(), use TorchSharp's own print() method, which supports several output styles:

```csharp
using TorchSharp;

var x = torch.arange(10);

x.print(style: TensorStringStyle.Default);
x.print(style: TensorStringStyle.Numpy);
x.print(style: TensorStringStyle.Metadata);
x.print(style: TensorStringStyle.Julia);
x.print(style: TensorStringStyle.CSharp);
```

```
[10], type = Int64, device = cpu 0 1 2 3 4 5 6 7 8 9
[0, 1, 2, ... 7, 8, 9]
[10], type = Int64, device = cpu
[10], type = Int64, device = cpu 0 1 2 3 4 5 6 7 8 9
[10], type = Int64, device = cpu, value = long [] {0L, 1L, 2L, ... 7L, 8L, 9L}
```

Python's output is easy to read, while C#'s default style is less so. For readable output, TensorStringStyle.Numpy is generally used.

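When printing in C#, the fltFormat parameter (string? fltFormat = "g5") controls how floating-point values are formatted. "g5" is the .NET general format specifier with five significant digits, so it limits the number of significant digits rather than the number of decimal places.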
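For comparison, Python controls tensor print formatting globally through torch.set_printoptions rather than with a per-call argument, and its precision option counts digits after the decimal point (default 4). A minimal Python sketch; the precision value 2 is an arbitrary choice for illustration:

```python
import torch

x = torch.tensor([1.23456, 2.5])

# Show two digits after the decimal point for floating-point tensors
torch.set_printoptions(precision=2)
short = str(x)
print(short)

# Restore PyTorch's default print options
torch.set_printoptions(profile="default")
print(x)
```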

The Maomi.Torch package provides extension methods such as x.print_numpy(), which prints directly in the corresponding style.

From here on, it is assumed that Python code imports the torch package and C# code imports the TorchSharp namespace. Later examples may omit these import statements, so add them yourself.

Basic Data Types

PyTorch's data types differ from the primitive types of the programming language itself, so pay attention to these differences.

For details, see the official documentation:

https://pytorch.org/docs/stable/tensor_attributes.html

https://pytorch.ac.cn/docs/stable/tensor_attributes.html

PyTorch represents data with torch.Tensor, the fundamental structure for handling all kinds of data in machine learning models, including scalars, vectors, matrices, and higher-dimensional tensors. If the author has understood correctly, the Tensor objects created in PyTorch are what we call tensors, and they can be created from many forms of data.

All data created in PyTorch is represented by Tensor objects.

It is recommended to revisit this understanding after reading this article.

The twelve commonly used PyTorch data types are listed below:

| Data type | dtype |
| ------------------- | ------------------------------------- |
| 32-bit floating point | torch.float32 or torch.float |
| 64-bit floating point | torch.float64 or torch.double |
| 64-bit complex | torch.complex64 or torch.cfloat |
| 128-bit complex | torch.complex128 or torch.cdouble |
| 16-bit floating point | torch.float16 or torch.half |
| 16-bit floating point (Brain Floating Point) | torch.bfloat16 |
| 8-bit integer (unsigned) | torch.uint8 |
| 8-bit integer (signed) | torch.int8 |
| 16-bit integer (signed) | torch.int16 or torch.short |
| 32-bit integer (signed) | torch.int32 or torch.int |
| 64-bit integer (signed) | torch.int64 or torch.long |
| Boolean | torch.bool |

The following examples create one-element arrays filled with 1, each with a specified type.

Python:

```python
float_tensor = torch.ones(1, dtype=torch.float)
double_tensor = torch.ones(1, dtype=torch.double)
complex_float_tensor = torch.ones(1, dtype=torch.complex64)
complex_double_tensor = torch.ones(1, dtype=torch.complex128)
int_tensor = torch.ones(1, dtype=torch.int)
long_tensor = torch.ones(1, dtype=torch.long)
uint_tensor = torch.ones(1, dtype=torch.uint8)
```

C#:

```csharp
var float_tensor = torch.ones(1, dtype: torch.float32);
var double_tensor = torch.ones(1, dtype: torch.float64);
var complex_float_tensor = torch.ones(1, dtype: torch.complex64);
var complex_double_tensor = torch.ones(1, dtype: torch.complex128);
var int_tensor = torch.ones(1, dtype: torch.int32);
var long_tensor = torch.ones(1, dtype: torch.int64);
var uint_tensor = torch.ones(1, dtype: torch.uint8);
```
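The aliases in the dtype table really are the same dtype objects, and each dtype occupies a fixed number of bytes per element. The following Python sketch checks a few aliases and reads the per-element size with Tensor.element_size(); the selection of dtypes is arbitrary:

```python
import torch

# Aliases from the table name the same dtype
assert torch.float == torch.float32
assert torch.double == torch.float64
assert torch.long == torch.int64

# Bytes used by one element of each dtype
sizes = {
    dtype: torch.ones(1, dtype=dtype).element_size()
    for dtype in (torch.float32, torch.float64, torch.complex64, torch.float16,
                  torch.bfloat16, torch.uint8, torch.int64, torch.bool)
}
for dtype, size in sizes.items():
    print(dtype, size)
```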

In C#, PyTorch's data types are represented by the torch.ScalarType enumeration, and fields such as torch.float32 hold its values, so a data type can be specified in two ways.

For example:

```csharp
var arr = torch.zeros(3, 3, 3, torch.ScalarType.Float32);
arr.print_numpy();
```

or:

```csharp
var arr = torch.zeros(3, 3, 3, torch.float32);
arr.print_numpy();
```

CPU or GPU Computation

AI models can run on either the CPU or the GPU, and so can PyTorch data: you can bind a device when creating a tensor, and computations on it then run on that device.

Generally, "cpu" denotes the CPU, while "cuda" or "cuda:{GPU index}" denotes a GPU.

The following code prints the device PyTorch is currently using by default, which tells you whether it is running on the GPU or the CPU.

Python:

```python
print(torch.get_default_device())
```

C#:

```csharp
Console.WriteLine(torch.get_default_device());
```

PyTorch's default device is the CPU unless you change it. The code below checks whether the current machine supports a GPU and, if so, makes it the default device; otherwise it falls back to the CPU. You can specify the GPU with torch.device('cuda') or torch.device('cuda:0').

Python:

```python
import torch

if torch.cuda.is_available():
    print("The current device supports GPU")
    device = torch.device('cuda')
    # Start with the GPU
    torch.set_default_device(device)
    current_device = torch.cuda.current_device()
    print(f"Bound GPU: {current_device}")
else:
    # GPU not supported, start with the CPU
    device = torch.device('cpu')
    torch.set_default_device(device)

default_device = torch.get_default_device()
print(f"Currently using {default_device}")
```

C#:

```csharp
if (torch.cuda.is_available())
{
    Console.WriteLine("The current device supports GPU");
    var device = torch.device("cuda", index: 0);
    // Start with the GPU
    torch.set_default_device(device);
}
else
{
    var device = torch.device("cpu");
    // Start with the CPU
    torch.set_default_device(device);
    Console.WriteLine("Currently using CPU");
}

var default_device = torch.get_default_device();
Console.WriteLine($"Currently using {default_device}");
```

C# does not have torch.cuda.current_device(), so it is recommended to set the index parameter to specify which GPU to use.

Additionally, you can get the number of available GPUs using torch.cuda.device_count(), but this will not be covered here.

PyTorch also lets you choose the CPU or GPU for individual tensors and use both devices in the same program, but this is not covered here.

Tensor Types

In PyTorch, scalars, arrays, and other values can be converted to the Tensor type; the data structure a Tensor represents is called a tensor. For example, a scalar:

```python
x = torch.tensor(3.0)
```
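Tensors differ in how many axes they have. The following Python sketch, using arbitrary example values, builds a scalar, a vector, and a matrix and inspects their number of dimensions (ndim), shape, and dtype; item() converts a 0-dimensional tensor back to a Python number:

```python
import torch

scalar = torch.tensor(3.0)               # 0-D tensor
vector = torch.tensor([1.0, 2.0, 3.0])   # 1-D tensor
matrix = torch.tensor([[1, 2], [3, 4]])  # 2-D tensor of integers

for name, t in [("scalar", scalar), ("vector", vector), ("matrix", matrix)]:
    print(name, t.ndim, tuple(t.shape), t.dtype)

# A 0-D tensor can be converted back to a plain Python number
value = scalar.item()
```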

Basic Arrays

PyTorch's asarray() function converts obj into a tensor. Its signature is:

torch.asarray(obj, *, dtype=None, device=None, copy=None, requires_grad=False) → Tensor

Official API Documentation: https://pytorch.org/docs/stable/generated/torch.asarray.html#torch-asarray

obj can be one of the following:

- a tensor

- a NumPy array or a NumPy scalar

- a DLPack capsule

- an object that implements Python’s buffer protocol

- a scalar

- a sequence of scalars

Parts of the documentation that are not relevant to this article are not translated here.

For example, you can pass an ordinary list and convert it into a PyTorch tensor.

Python:

```python
arr = torch.asarray([1, 2, 3, 4, 5, 6], dtype=torch.float)
print(arr)
```

C#:

```csharp
var arr = torch.from_array(new float[] { 1, 2, 3, 4, 5, 6 });
arr.print(style: TensorStringStyle.Numpy);
```

Note that the APIs of the two languages differ considerably; for example, the C# code uses torch.from_array() where the Python code uses torch.asarray().
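One detail of asarray() worth knowing, per the official documentation linked above: when obj is already a tensor (or a NumPy array) with a matching dtype and device, the result shares memory with it unless copy=True is passed. A minimal Python sketch with arbitrary values:

```python
import torch

from_list = torch.asarray([1, 2, 3])       # Python ints are inferred as int64

source = torch.tensor([1.0, 2.0, 3.0])
shared = torch.asarray(source)             # no copy: same underlying memory
copied = torch.asarray(source, copy=True)  # explicit copy

source[0] = 100.0   # visible through `shared`, not through `copied`
print(shared, copied)
```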

As mentioned earlier, you can also choose the CPU or GPU for an individual tensor. The following code places a tensor on the first GPU:

```python
device = torch.device("cuda", index=0)
arr = torch.asarray(obj=[1, 2, 3, 4, 5, 6], dtype=torch.float, device=device)
print(arr)
```

To move a tensor back to the CPU, call cpu():

```python
device = torch.device("cuda", index=0)
arr = torch.asarray(obj=[1, 2, 3, 4, 5, 6], dtype=torch.float, device=device)
arr = arr.cpu()
print(arr)
```

Keep in mind that copying data between the GPU and the CPU has a performance cost.
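The two snippets above assume a CUDA GPU is present and fail on a CPU-only machine. A hedged Python variant that picks the first GPU when one is available and otherwise falls back to the CPU (the fallback logic is an addition for illustration, not part of the original example):

```python
import torch

# Use the first GPU when available, otherwise fall back to the CPU
device = torch.device("cuda", 0) if torch.cuda.is_available() else torch.device("cpu")

arr = torch.asarray([1, 2, 3, 4, 5, 6], dtype=torch.float, device=device)
print(arr.device)

# .cpu() returns a CPU tensor: a copy if arr is on the GPU, arr itself otherwise
on_cpu = arr.cpu()
print(on_cpu.device)
```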

Generating Arrays

torch.zeros

Creates an array of the given size with every element set to 0. Its signature is:

torch.zeros(*size, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

Python:

```python
arr = torch.zeros(10, dtype=torch.float)
print(arr)
```

C#:

```csharp
var arr = torch.zeros(10);
arr.print(style: TensorStringStyle.Numpy);
```

You can also specify the shape of the generated array; for example, the following C# code creates a 2*3 array:

```csharp
var arr = torch.zeros(2, 3, torch.float32);
arr.print(style: TensorStringStyle.Numpy);
```

Printed result:

```
[[0, 0, 0] [0, 0, 0]]
```

Higher-dimensional arrays work the same way; for example, a 3*3*3 array:

```csharp
var arr = torch.zeros(3, 3, 3, torch.float32);
arr.print(style: TensorStringStyle.Numpy);
```

For readability, the printed output is formatted below:

```
[
 [[0, 0, 0] [0, 0, 0] [0, 0, 0]]
 [[0, 0, 0] [0, 0, 0] [0, 0, 0]]
 [[0, 0, 0] [0, 0, 0] [0, 0, 0]]
]
```
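In Python, torch.zeros accepts the sizes either as separate arguments or as a single tuple, and without a dtype it uses the default floating-point type (float32 unless changed). A short sketch with arbitrary sizes:

```python
import torch

a = torch.zeros(2, 3)                   # sizes as separate arguments
b = torch.zeros((3, 3, 3))              # or as a single tuple
c = torch.zeros(10, dtype=torch.int64)  # explicit dtype

print(a.shape, b.shape, a.dtype, c.dtype)
```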

torch.ones

Creates an array in which every element is 1. Its usage is identical to torch.zeros, so it is not covered in detail here.

torch.empty

Creates an uninitialized array. Its usage is the same as torch.zeros, so it is not covered in detail here. Because the memory is not initialized, it may contain leftover data, and the element values are indeterminate.
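Because torch.empty leaves memory uninitialized, its contents should be written before they are read. A minimal Python sketch that fills an empty tensor with Tensor.fill_() (the value 7.0 is arbitrary) next to a torch.ones call:

```python
import torch

ones = torch.ones(2, 3)

empty = torch.empty(2, 3)  # contents are arbitrary until written
empty.fill_(7.0)           # write every element before using the tensor
```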

Copy Functions

Each of the three functions above also has a counterpart that uses an existing tensor as a template:

torch.ones_like(input, *, dtype=None, layout=None, device=None, requires_grad=False, memory_format=torch.preserve_format) → Tensor

torch.zeros_like(input, *, dtype=None, layout=None, device=None, requires_grad=False, memory_format=torch.preserve_format) → Tensor

torch.empty_like(input, *, dtype=None, layout=None, device=None, requires_grad=False, memory_format=torch.preserve_format) → Tensor

These functions create a new tensor with the same shape as input (and, unless overridden, the same dtype and device) and fill it with the corresponding value; the values of input themselves are not copied.

In the following example, the shape of a zero-filled array is reused, but the new array is filled with 1s.

```csharp
var arr = torch.ones_like(torch.zeros(3, 3, 3));
arr.print(style: TensorStringStyle.Numpy);
```

```
[
 [[1, 1, 1] [1, 1, 1] [1, 1, 1]]
 [[1, 1, 1] [1, 1, 1] [1, 1, 1]]
 [[1, 1, 1] [1, 1, 1] [1, 1, 1]]
]
```
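The _like functions also keep the template's dtype unless told otherwise. A Python sketch with an arbitrary int64 template, showing the inherited dtype and an explicit dtype override:

```python
import torch

template = torch.zeros(2, 3, dtype=torch.int64)

ones = torch.ones_like(template)                          # same shape and dtype as template
floats = torch.zeros_like(template, dtype=torch.float32)  # same shape, dtype overridden
```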

torch.rand

torch.rand generates a tensor filled with random numbers uniformly distributed in the interval [0, 1).

The function is defined as follows:

torch.rand(*size, *, generator=None, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False, pin_memory=False) → Tensor

For example, the following C# code generates a 2*3 array of random numbers in [0, 1):

```csharp
var arr = torch.rand(2, 3);
arr.print(style: TensorStringStyle.Numpy);
```

```
[[0.60446, 0.058962, 0.65601] [0.58197, 0.76914, 0.16542]]
```
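Since the values are random, each run prints different numbers. When reproducible output is needed, for example while debugging, the generator can be seeded. A Python sketch using torch.manual_seed and the generator parameter from the signature above; the seed 42 is arbitrary:

```python
import torch

torch.manual_seed(42)
a = torch.rand(2, 3)
torch.manual_seed(42)
b = torch.rand(2, 3)  # same seed, same numbers

# A dedicated generator leaves the global random state untouched
gen = torch.Generator().manual_seed(42)
c = torch.rand(2, 3, generator=gen)
```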

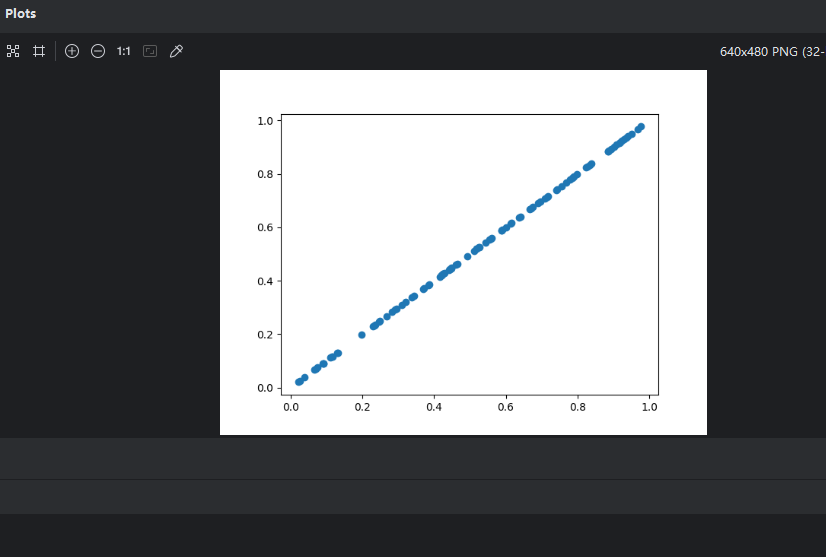

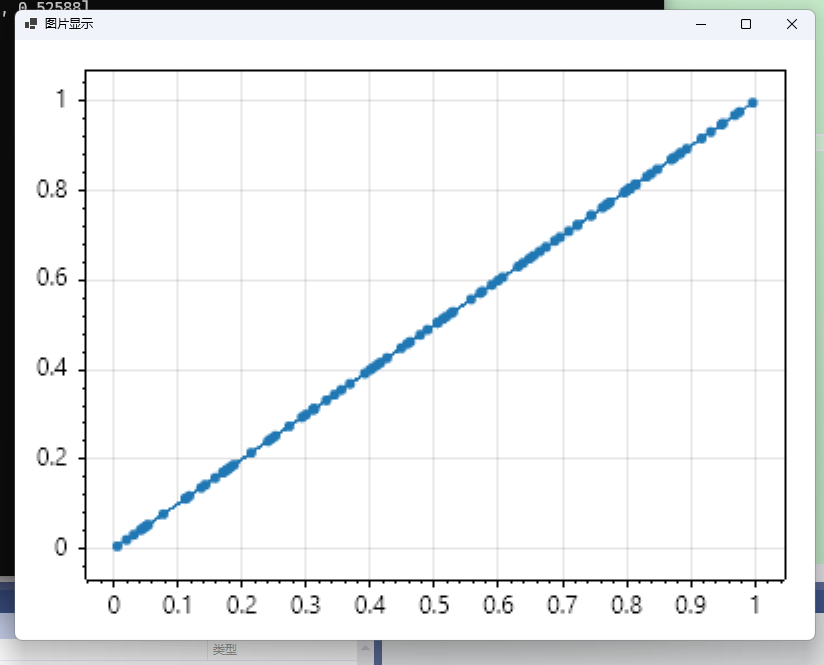

Because C# plotting libraries are not as simple and convenient as Python's matplotlib, readers can use the two packages Maomi.Torch.ScottPlot and Maomi.ScottPlot.Winforms, which quickly convert PyTorch tensors for plotting and open a plot window. The examples below plot uniformly distributed random numbers, first with Python's matplotlib and then in C# with Maomi.ScottPlot.Winforms.

Python:

```python
import torch
import matplotlib.pyplot as plt

arr = torch.rand(100, dtype=torch.float)
print(arr)

x = arr.numpy()
y = x
plt.scatter(x, y)
plt.show()
```

C#:

```csharp
using Maomi.Torch;
using Maomi.Plot;
using TorchSharp;

var x = torch.rand(100);
x.print(style: TensorStringStyle.Numpy);

ScottPlot.Plot myPlot = new();
myPlot.Add.Scatter(x, x);
var form = myPlot.Show(400, 300);
```

As can be seen from the graph, the generated random numbers are uniformly distributed within the range of [0,1).
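Uniformity can also be checked numerically by counting how many samples land in equal-width bins. A Python sketch using torch.histc with an arbitrary seed and sample size; for a uniform distribution each of the 10 bins should hold roughly a tenth of the samples:

```python
import torch

torch.manual_seed(0)
n = 100_000
x = torch.rand(n)

# Count samples in 10 equal-width bins covering [0, 1]
counts = torch.histc(x, bins=10, min=0.0, max=1.0)
print(counts)
```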

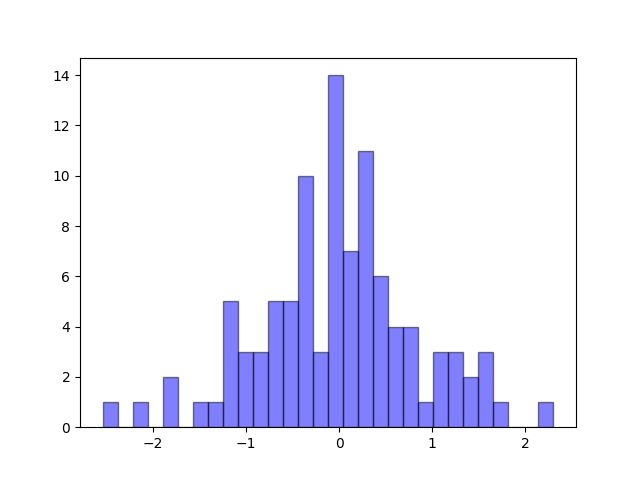

torch.randn

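Generates random samples of the given shape from the standard normal distribution (mean 0, variance 1). Unlike torch.rand, the samples are not restricted to [0, 1): they can take any real value, with about 68% of them falling in [-1, 1].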

The definition is as follows:

torch.randn(*size, *, generator=None, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False, pin_memory=False) → Tensor

Official documentation: https://pytorch.ac.cn/docs/stable/generated/torch.randn.html#torch.rand

Since drawing in C# is cumbersome, here is an example written in Python:

```python
import torch
import matplotlib.pyplot as plt

arr = torch.randn(100, dtype=torch.float)
print(arr)

x = arr.numpy()
plt.hist(x, bins=30, alpha=0.5, color='b', edgecolor='black')
plt.show()
```

In the resulting histogram, the x-axis shows the sample values and the y-axis shows how many samples fall into each bin.
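The same properties can be checked numerically. The following Python sketch (seed and sample size are arbitrary) confirms that torch.randn samples have a mean near 0 and a standard deviation near 1, that roughly 68% fall within [-1, 1], and that values outside [0, 1) do occur:

```python
import torch

torch.manual_seed(0)
x = torch.randn(100_000)

mean = x.mean().item()
std = x.std().item()
within_one = ((x >= -1) & (x <= 1)).float().mean().item()  # fraction in [-1, 1]
print(mean, std, within_one, x.min().item(), x.max().item())
```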

torch.randint

Generates random integers in the range [low, high); high itself is excluded.

The definition is as follows:

torch.randint(low=0, high, size, *, generator=None, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

For example, the following C# code generates 10 integers in [0, 100), arranged in a 5*2 structure:

```csharp
var arr = torch.randint(low: 0, high: 100, size: new int[] { 5, 2 });
arr.print(style: TensorStringStyle.Numpy);
```

```
[[17, 46] [89, 52] [10, 89] [80, 91] [52, 91]]
```
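In Python, the same call takes the size as a tuple. The sketch below also illustrates that high is exclusive: drawing from randint(3, 5, ...) can only yield 3 or 4. Sizes and bounds are arbitrary:

```python
import torch

arr = torch.randint(0, 100, (5, 2))
small = torch.randint(3, 5, (1000,))  # high is exclusive, so only 3 and 4 can appear
print(arr)
```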

To generate floating-point numbers in a given range, use torch.rand and scale the result yourself, since torch.rand only produces values in [0, 1). For example, multiplying by 100 gives random numbers in [0, 100):

```csharp
var arr = torch.rand(size: 100, dtype: torch.ScalarType.Float32) * 100;
arr.print(style: TensorStringStyle.Numpy);
```
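Generalizing the scaling trick, random floats in [low, high) can be produced as low + (high - low) * torch.rand(...). The helper name uniform below is hypothetical, written only for this sketch; Tensor.uniform_() is PyTorch's built-in in-place alternative. Because of floating-point rounding, the scaled result can in rare cases land exactly on high:

```python
import torch

def uniform(low: float, high: float, size: int) -> torch.Tensor:
    """Hypothetical helper: random floats in [low, high) by scaling torch.rand."""
    return low + (high - low) * torch.rand(size)

samples = uniform(-5.0, 5.0, 1000)

# Built-in alternative: fill an existing tensor in place
alt = torch.empty(1000).uniform_(-5.0, 5.0)
```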

torch.arange

Generates a one-dimensional array of evenly spaced values, starting at start (inclusive), stopping before end (exclusive), and advancing by step.

The definition is as follows:

torch.arange(start=0, end, step=1, *, out=None, dtype=None, layout=torch.strided, device=None, requires_grad=False) → Tensor

For example, to generate the array [0,1,2,3,4,5,6,7,8,9]:

```csharp
var arr = torch.arange(start: 0, stop: 10, step: 1);
arr.print(style: TensorStringStyle.Numpy);
```

If you change the step size to 0.5:

```csharp
var arr = torch.arange(start: 0, stop: 10, step: 0.5);
```

```
[0.0000, 0.5000, 1.0000, 1.5000, 2.0000, 2.5000, 3.0000, 3.5000, 4.0000,
 4.5000, 5.0000, 5.5000, 6.0000, 6.5000, 7.0000, 7.5000, 8.0000, 8.5000,
 9.0000, 9.5000]
```
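With a floating-point step, the result also switches to a floating-point dtype. A Python sketch of both cases above; note that the PyTorch documentation warns that a non-integer step is subject to floating-point rounding errors when compared against end:

```python
import torch

ints = torch.arange(0, 10)          # end is exclusive: 0..9
halves = torch.arange(0, 10, 0.5)   # float step: 20 values, 0.0 .. 9.5

print(ints.dtype, halves.dtype, len(halves), halves[-1].item())
```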

Array Operations and Calculations

Axes

PyTorch often uses the dim (dimension) parameter to refer to an axis, where an axis is one of the nesting levels (layers) of a tensor.

Consider the following array:

[[1, 2, 3], [4, 5, 6]]

If we denote a = [1,2,3] and b = [4,5,6], the array becomes:

[a,b]

Along the first axis (dim 0), a is at index 0 and b is at index 1, so arr[0] returns a and arr[1] returns b.

```csharp
var arr = torch.from_array(new[,] { { 1, 2, 3 }, { 4, 5, 6 } });
arr.print(style: TensorStringStyle.Numpy);

// Print the shape
arr.shape.print();

var a = arr[0];
a.print();

var b = arr[1];
b.print();
```

```
[[1, 2, 3] [4, 5, 6]]
[2, 3]
[3], type = Int32, device = cpu 1 2 3
[3], type = Int32, device = cpu 4 5 6
```

This can be understood in two steps. The array is a 2*3 array, and its shape can be printed with .shape.print().

Because the first layer has two elements, Tensor[i] accesses the i-th element of the first layer, where 0 <= i < 2.

Similarly, a and b each have 3 elements in the next layer, so an index n into the second layer must satisfy 0 <= n < 3.

For instance, suppose you want to extract the elements 3 and 6 from the array.

In C#, you can write it as follows. The results are single elements (0-dimensional tensors), which cannot be printed with TensorStringStyle.Numpy (nothing is printed), so TensorStringStyle.CSharp is used instead.

```csharp
var arr = torch.from_array(new[,] { { 1, 2, 3 }, { 4, 5, 6 } });

var a = arr[0, 2];
a.print(style: TensorStringStyle.CSharp);

var b = arr[1, 2];
b.print(style: TensorStringStyle.CSharp);
```

Similarly, if the array has three layers, you can obtain the elements 3 and 6 like this:

```csharp
var arr = torch.from_array(new[, ,] { { { 1, 2, 3 } }, { { 4, 5, 6 } } });

var a = arr[0, 0, 2];
a.print(style: TensorStringStyle.CSharp);

var b = arr[1, 0, 2];
b.print(style: TensorStringStyle.CSharp);
```
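For comparison, Python uses the same index tuples, plus the : slice to select along a whole axis. A short sketch on the 2*3 array from the first example:

```python
import torch

arr = torch.tensor([[1, 2, 3], [4, 5, 6]])

a = arr[0]          # first row: tensor([1, 2, 3])
three = arr[0, 2]   # 0-D tensor holding 3
column = arr[:, 2]  # element 2 of every row: tensor([3, 6])
print(a, three.item(), column)
```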

To extract a range of elements, TorchSharp supports C#'s a[i..j] range syntax for slicing, where j is exclusive:

```csharp
var arr = torch.from_array(new int[] { 1, 2, 3 });
arr = arr[0..2];
arr.print(style: TensorStringStyle.Numpy);
```

```
[1, 2]
```
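Python expresses the same slice as a[i:j], also with j exclusive, and supports negative indices and per-axis slices. A short sketch with arbitrary values:

```python
import torch

arr = torch.tensor([1, 2, 3])
first_two = arr[0:2]  # end index is exclusive, like C#'s 0..2
last = arr[-1]        # negative indices count from the end

grid = torch.tensor([[1, 2, 3], [4, 5, 6]])
right = grid[:, 1:]   # all rows, columns 1 onward
```

Basic slices like these are views that share memory with the original tensor, so writing to a slice also modifies the source.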

Array Sorting

PyTorch has several sorting functions:

- sort: sorts the elements of the input tensor by value along the specified dimension, in ascending order by default.
- argsort: returns the indices that would sort the tensor along the specified dimension; it is not discussed further here.
- msort: sorts the input tensor by value in ascending order along its first dimension. torch.msort(t) is equivalent to torch.sort(t, dim=0).values.

sort can sort in ascending or descending order. Its parameters are:

torch.sort(input, dim=-1, descending=False, stable=False, *, out=None)

- input (Tensor): the input tensor.
- dim (int, optional): the dimension to sort along; the default -1 means the last dimension.
- descending (bool, optional): controls the sort order (ascending or descending).
- stable (bool, optional): makes the sort stable, preserving the order of equal elements.

Example:

```csharp
var arr = torch.arange(start: 0, stop: 10, step: 1);

// or use torch.sort(arr, descending: true)
(torch.Tensor Values, torch.Tensor Indices) a1 = arr.sort(descending: true);
a1.Values.print(style: TensorStringStyle.Numpy);
```

```
[9, 8, 7, ... 2, 1, 0]
```

Values holds the sorted elements, and Indices holds the position of each sorted element in the original tensor.
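In Python, torch.sort returns a named tuple with values and indices fields. The sketch below (arbitrary input) shows that indexing the original tensor with indices reproduces values, and checks the msort equivalence mentioned earlier:

```python
import torch

arr = torch.tensor([3, 1, 2])
result = torch.sort(arr, descending=True)  # named tuple (values, indices)

# Indices point back into the original tensor
rebuilt = arr[result.indices]

m = torch.tensor([[3, 1], [1, 2]])
same = torch.equal(torch.msort(m), torch.sort(m, dim=0).values)
```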

For a multidimensional tensor, sort uses dim = -1 by default, so it sorts along the last dimension: each innermost array is sorted independently. The following code uses a two-level array:

```csharp
var arr = torch.from_array(new[,] { { 4, 6, 5 }, { 8, 9, 7 }, { 3, 2, 1 } });
(torch.Tensor Values, torch.Tensor Indices) a1 = arr.sort();
a1.Values.print(style: TensorStringStyle.Numpy);
a1.Indices.print(style: TensorStringStyle.Numpy);
```

```
[[4, 5, 6] [7, 8, 9] [1, 2, 3]]
[[0, 2, 1] [2, 0, 1] [2, 1, 0]]
```

Indices records where each element was located before sorting.

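With arr.sort(dim: 0), the sort runs along the first dimension, so each column is sorted independently. Here all three columns happen to share the same ordering, which is why whole rows appear to move:

```
[[3, 2, 1] [4, 6, 5] [8, 9, 7]]
[[2, 2, 2] [0, 0, 0] [1, 1, 1]]
```

With arr.sort(dim: 1), which for this 2-D array is the same as the default, only the innermost layer is sorted:

```
[[4, 5, 6] [7, 8, 9] [1, 2, 3]]
[[0, 2, 1] [2, 0, 1] [2, 1, 0]]
```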
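The same two sorts in Python, on the same 3*3 array, produce the values and indices listed above; a sketch for readers following along in Python:

```python
import torch

arr = torch.tensor([[4, 6, 5], [8, 9, 7], [3, 2, 1]])

by_column = torch.sort(arr, dim=0)  # each column sorted independently
by_row = torch.sort(arr, dim=1)     # each row sorted independently (same as dim=-1 here)
```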

For a higher-dimensional tensor, you can sort along each dimension in turn, from the innermost outward:

```csharp
var arr = torch.from_array(new[, ,] { { { 4, 6, 5 }, { 8, 9, 7 }, { 3, 2, 1 } } });
var dimCount = arr.shape.Length;

// Sort along the last dimension first, then work outward
for (int i = dimCount - 1; i >= 0; i--)
{
    (torch.Tensor Values, torch.Tensor Indices) a1 = arr.sort(dim: i);
    arr = a1.Values;
}

arr.print(style: TensorStringStyle.Numpy);
```

```
[[[1, 2, 3] [4, 5, 6] [7, 8, 9]]]
```
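Sorting one dimension after another happens to fully sort this particular array, but it does not do so in general. A Python sketch with a small counterexample (chosen for illustration) and the usual alternative: flatten, sort all elements, then restore the shape with reshape:

```python
import torch

arr = torch.tensor([[1, 4], [2, 3]])

# Last dimension first, then the first dimension (same order as the C# loop)
per_dim = torch.sort(torch.sort(arr, dim=1).values, dim=0).values

# Full sort: flatten, sort all elements, then restore the shape
full = torch.sort(arr.flatten()).values.reshape(arr.shape)
print(per_dim, full)
```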

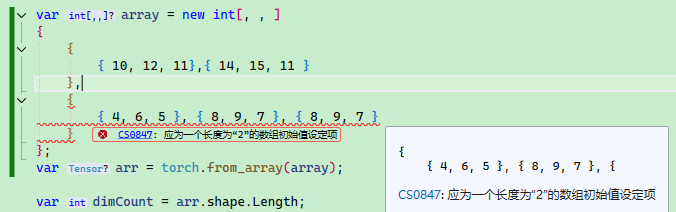

C# multidimensional arrays are less flexible than Python lists: they are rectangular, so every sub-array at the same level must have the same number of elements.

For example, the array declaration below is correct:

```csharp
var array = new int[, ,]
{
    {
        { 10, 12, 11 }, { 14, 15, 11 }
    },
    {
        { 4, 6, 5 }, { 8, 9, 7 }
    }
};
```

If the sub-arrays have different numbers of elements, the code fails to compile:

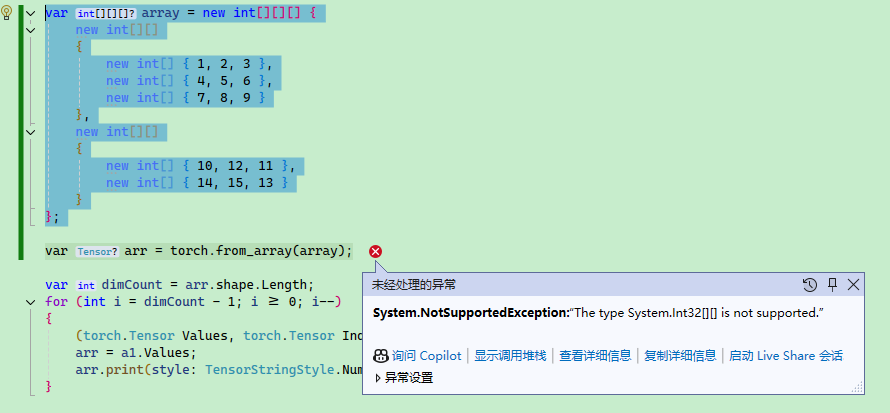

Also note that C# has both multidimensional arrays and jagged arrays (shown below), and TorchSharp does not support jagged arrays. A multidimensional array is a single rectangular array, while a jagged array is an array of arrays (or an array of arrays of arrays) whose inner arrays may have different lengths, so it is important to distinguish between the two.

```csharp
var array = new int[][][]
{
    new int[][]
    {
        new int[] { 1, 2, 3 },
        new int[] { 4, 5, 6 },
        new int[] { 7, 8, 9 }
    },
    new int[][]
    {
        new int[] { 10, 12, 11 },
        new int[] { 14, 15, 13 }
    }
};
```
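Python nested lists may be ragged, but tensors have the same rectangular requirement. A Python sketch (arbitrary values) showing that torch.tensor rejects rows of different lengths with a ValueError:

```python
import torch

rectangular = torch.tensor([[1, 2, 3], [4, 5, 6]])

# A ragged nested list (rows of different lengths) cannot become a tensor
try:
    torch.tensor([[1, 2, 3], [4, 5]])
    ragged_ok = True
except ValueError as err:
    ragged_ok = False
    print(err)
```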

Array Operators

PyTorch tensors support many operators. Some common ones are listed below:

Arithmetic Operators

- +: Addition, e.g., a + b
- -: Subtraction, e.g., a - b
- *: Element-wise multiplication, e.g., a * b
- /: Element-wise division, e.g., a / b
- //: Element-wise floor division, e.g., a // b; not supported by TorchSharp.
- %: Modulus, e.g., a % b
- **: Power, e.g., a ** b; not supported by TorchSharp, use .pow(x) instead.

Comparison Operators

- ==: Element-wise equality comparison, e.g., a == b
- !=: Element-wise inequality comparison, e.g., a != b
- >: Element-wise greater-than comparison, e.g., a > b
- <: Element-wise less-than comparison, e.g., a < b
- >=: Element-wise greater-than-or-equal comparison, e.g., a >= b
- <=: Element-wise less-than-or-equal comparison, e.g., a <= b

Bitwise Operators

- &: Bitwise AND, e.g., a & b
- |: Bitwise OR, e.g., a | b
- ^: Bitwise XOR, e.g., a ^ b
- ~: Bitwise NOT, e.g., ~a
- <<: Bitwise left shift, e.g., a << b
- >>: Bitwise right shift, e.g., a >> b

Indexing and Slicing

- [i]: Indexing operator, e.g., a[i]
- [i:j]: Slicing operator, e.g., a[i:j]; TorchSharp uses the a[i..j] syntax.
- [i, j]: Multi-dimensional indexing operator, e.g., a[i, j]

For example, to multiply every element of a tensor by 10:

```csharp
var arr = torch.from_array(new int[] { 1, 2, 3 });
arr = arr * 10;
arr.print(style: TensorStringStyle.Numpy);
```

```
[10, 20, 30]
```
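A Python sketch exercising several operators from the lists above on two arbitrary integer tensors, including the // and ** operators that TorchSharp lacks:

```python
import torch

a = torch.tensor([7, 8, 9])
b = torch.tensor([2, 3, 4])

total = a + b       # element-wise addition
floor_div = a // b  # floor division (no TorchSharp operator)
remainder = a % b   # modulus
power = a ** 2      # in TorchSharp: a.pow(2)
mask = a > 7        # comparisons produce bool tensors
bits = a & b        # bitwise AND on integer tensors
```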

PyTorch provides many more functions, which later chapters introduce gradually.
